Google adds new AI accessibility features to Pixel phones and Android

Google on Tuesday introduced new artificial intelligence (AI) accessibility features for Pixel smartphones and Android devices. Of the four new features, two are exclusive to Pixel smartphones and two are available more broadly on Android. They are designed for people with visual impairments, people who are deaf or hard of hearing, and people with speech disabilities. The features are Guided Frame, new AI capabilities in the Magnifier app, and improvements to Live Transcribe and Live Captions.

Google adds AI-powered accessibility features

In a blog post, the tech giant stressed its commitment to working with the disability community and to using new accessibility tools and innovation to make technology more inclusive.

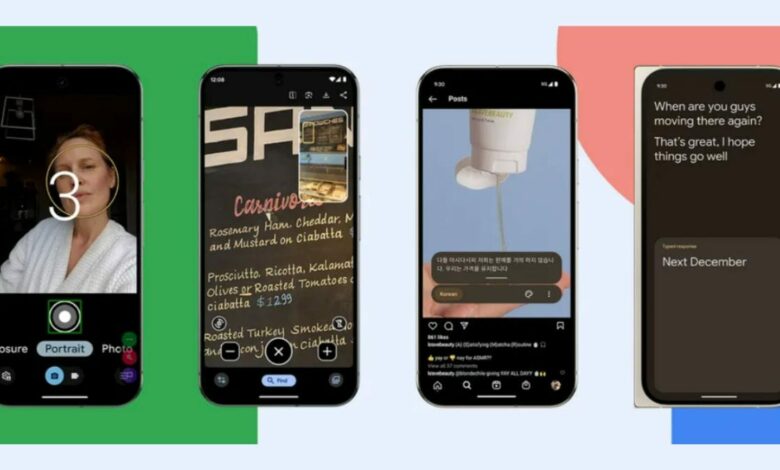

The first feature, Guided Frame, is exclusive to the Pixel camera. It gives users spoken guidance to help them position their face within the frame and find the right camera angle, and it is intended for people who are blind or have low vision. Google says the feature prompts users to tilt their face up or down, or to pan left or right, before the camera automatically takes the shot. It also tells users when the lighting is too poor, so they can find a better frame.

Previously available through Android’s TalkBack screen reader, the feature is now included in the camera settings.

Another Pixel-specific feature is an upgrade to the Magnifier app. Introduced last year, the app lets users zoom in on the world around them with their camera, for example to read signs or find items on a menu. Now, Google has used AI to let users search for specific words in their surroundings.

This lets users look up information about their flight at the airport or find a specific item on a restaurant menu, with the app automatically zooming in on the word. A new picture-in-picture mode shows the zoomed-out view in a smaller window while the searched word stays locked in the larger window. Users can also switch between camera lenses for specific purposes, and the app supports the front camera, so it can be used as a mirror.

Live Transcribe is also getting an upgrade that is supported only on foldable phones. In dual-screen mode, it can now show each speaker their own transcript. For example, if two people are sitting across from each other at a table, the phone can be placed between them, and each half of the screen shows the person facing it what the other has said. Google says this will make the conversation easier for everyone to follow.

Live Captions is getting an upgrade too. Google has added support for seven new languages: Chinese, Korean, Polish, Portuguese, Russian, Turkish, and Vietnamese. Whenever the device plays audio, users can now get real-time captions in these languages as well.

Google said these languages will also be available on-device for Live Transcribe, bringing the total number of on-device languages to 15. For these languages, users no longer need an internet connection to transcribe speech. When connected to the internet, the feature works with 120 languages.