Cloudflare launches bot management tools to let AI bots pay for data scraping

Cloudflare has launched a new set of tools to give website owners and content creators more control over how their content is used by artificial intelligence.

The AI Audit tools are a response to growing concerns that bots are scanning websites without their creators' permission and without compensating them.

Cloudflare's current effort to block AI bots follows previous work to identify such unwanted scrapers via digital fingerprinting, which is intended to distinguish bots from legitimate web users.

Cloudflare wants to put an end to AI bots

Bots themselves aren’t the problem: those used by search engines can provide value by indexing content and driving traffic to websites. AI, however, may make it harder to block only certain types of bots.

AI bots used by large language models often scrape publicly available data to train models without attributing or crediting sources, and without compensating creators. This can lead to creators finding their work, or something closely resembling it, in AI-generated responses.

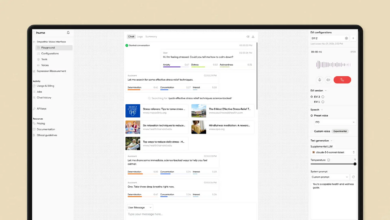

Cloudflare’s AI Audit was built to give website owners detailed analytics that show which AI bots are visiting their sites, how often, and which parts of the site they access. For example, it can distinguish between bots like OpenAI’s GPTBot and Anthropic’s ClaudeBot.
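As a rough illustration of this kind of per-bot breakdown, and not a description of how Cloudflare implements it, the sketch below tallies requests by crawler and path. The `AI_BOT_TOKENS` set, the `identify_bot` and `summarize` functions, and the sample records are all hypothetical. It assumes each crawler announces itself with a token in its User-Agent header, which a scraper that wants to hide will not do.

```python
from collections import Counter

# Hypothetical set of User-Agent tokens to look for.
AI_BOT_TOKENS = {"GPTBot", "ClaudeBot"}


def identify_bot(user_agent):
    """Return the first known token found in the User-Agent, else None."""
    ua = user_agent.lower()
    for token in sorted(AI_BOT_TOKENS):
        if token.lower() in ua:
            return token
    return None


def summarize(records):
    """Count requests per (bot, path), ignoring unrecognized clients."""
    counts = Counter()
    for user_agent, path in records:
        bot = identify_bot(user_agent)
        if bot is not None:
            counts[(bot, path)] += 1
    return counts


# Made-up (user_agent, path) records, for illustration only.
sample = [
    ("Mozilla/5.0 (compatible; GPTBot/1.0)", "/blog/post-1"),
    ("Mozilla/5.0 (compatible; GPTBot/1.0)", "/blog/post-1"),
    ("Mozilla/5.0 (compatible; ClaudeBot/1.0)", "/docs"),
    ("Mozilla/5.0 (Windows NT 10.0) Firefox/130.0", "/blog/post-1"),
]

for (bot, path), hits in sorted(summarize(sample).items()):
    print(bot, path, hits)
```

Because a User-Agent string is self-reported, a tally like this only covers bots that identify themselves honestly.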

Cloudflare CEO Matthew Prince noted, “Content creators and website owners of all sizes deserve to own and control their content. Without it, the quality of information online will decline or remain exclusively behind paywalls.”

In addition to identifying bots, Cloudflare’s tools provide controls and custom rules that let website owners allow or block AI services, eliminate scraping altogether, or align it with deals they may have entered into with certain AI companies.
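The idea of per-bot allow and block rules can be sketched as below. This is a minimal illustration, not Cloudflare's rule syntax or API: the `Rule` class, the `decide` function, and the example rules are hypothetical, and matching on the User-Agent header alone is an assumption made for simplicity.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    bot_token: str  # substring expected in the User-Agent header
    action: str     # "allow" or "block"


def decide(user_agent, rules, default="allow"):
    """Return the action of the first matching rule, else the default."""
    ua = user_agent.lower()
    for rule in rules:
        if rule.bot_token.lower() in ua:
            return rule.action
    return default


# Hypothetical policy: one crawler is allowed (say, under a licensing
# deal), another is blocked, and everything else gets the default.
rules = [
    Rule("GPTBot", "allow"),
    Rule("ClaudeBot", "block"),
]

print(decide("Mozilla/5.0 (compatible; ClaudeBot/1.0)", rules))
```

Setting `default="block"` would instead express a policy where only explicitly allowed clients get through.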