Microsoft’s new AI tool aims to find and correct AI-generated text that is factually incorrect

Microsoft has unveiled a new tool that can detect and correct AI-generated content that is factually incorrect. Such errors are commonly known as hallucinations.

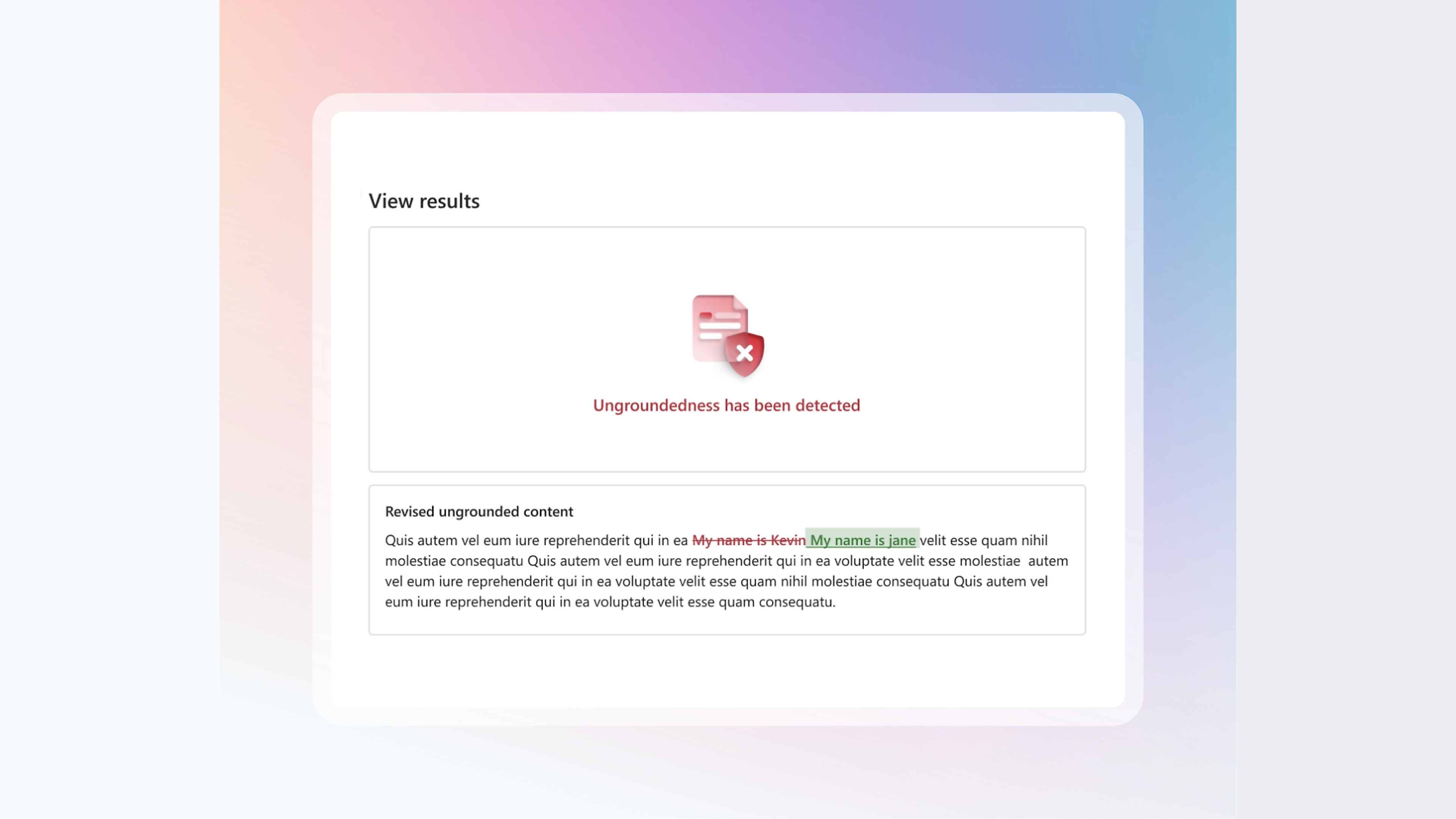

The new Correction feature builds on Microsoft’s existing “groundedness detection,” which cross-references AI text with a supporting document supplied by the user. The tool will be available as part of Microsoft’s Azure AI Content Safety API and can be used with any text-generating AI model, such as OpenAI’s GPT-4o and Meta’s Llama.

The service flags anything that could be an error and then fact-checks it by comparing the text against a source document supplied as the source of truth (e.g. uploaded transcripts). This means that users can tell the AI what to treat as fact by providing source documents.
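To make the idea of checking text against a source document concrete, here is a deliberately simple sketch. It is not Microsoft's method and does not call any Azure API: the function names (`tokenize`, `split_sentences`, `flag_ungrounded`), the word-overlap score, and the 0.6 threshold are all assumptions chosen for illustration. A production system would use far more sophisticated language-model-based comparison.

```python
import re

def tokenize(text):
    # Lowercase the text and return its set of alphanumeric words.
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def split_sentences(text):
    # Naive sentence splitter: break after ., ! or ? followed by whitespace.
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]

def flag_ungrounded(generated, source, threshold=0.6):
    # Hypothetical helper: flag each generated sentence whose share of
    # words found in the source document falls below the threshold.
    source_words = tokenize(source)
    flagged = []
    for sentence in split_sentences(generated):
        words = tokenize(sentence)
        if not words:
            continue
        support = len(words & source_words) / len(words)
        if support < threshold:
            flagged.append((sentence, round(support, 2)))
    return flagged

# Illustrative inputs only; the "source of truth" is whatever the user supplies.
source = "The meeting was held on Tuesday. The budget was approved by the board."
generated = "The meeting was held on Tuesday. The CEO resigned after the vote."

for sentence, support in flag_ungrounded(generated, source):
    print(f"Possibly ungrounded ({support}): {sentence}")
```

In this toy run, the first sentence is fully supported by the source, while the second shares almost no words with it and is flagged for review. Word overlap is a crude proxy, so it would miss paraphrases and contradictions that a real groundedness check is meant to catch.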

An emergency measure

Experts warn that while the tool may be useful, it does not address the root cause of hallucinations. AI does not actually ‘know’ anything; it only predicts what comes next based on the examples it has been trained on.

“It is critical that our customers are able to understand and take action on unsubstantiated content and hallucinations, especially as the demand for reliability and accuracy of AI-generated content continues to increase,” Microsoft said in its blog post.

“This groundbreaking capability builds on our existing Groundedness Detection capability and enables Azure AI Content Safety to identify and correct hallucinations in real time, before they impact users of generative AI applications.”

The feature, now available in preview, is part of Microsoft’s broader efforts to make AI more trustworthy. Generative AI has struggled to gain public trust thus far, with deepfakes and misinformation tarnishing its image, so further efforts to make the service more secure will be welcomed.

Also part of the updates are ‘Evaluations’, a proactive risk assessment tool, and confidential inference. The latter ensures that sensitive information remains secure and private during the inference process, that is, when the model makes decisions and predictions based on new data.

Microsoft and other tech giants have invested heavily in AI technologies and infrastructure and will continue to do so, recently announcing a new $30 billion investment.