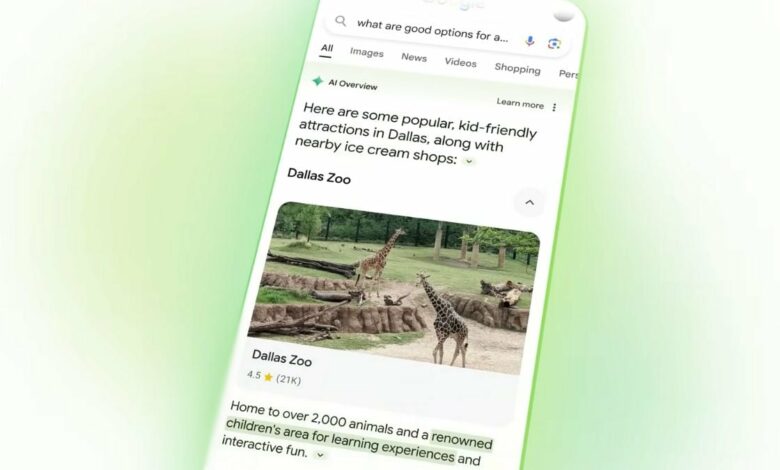

Google AI Overviews could soon let you dive deeper into a topic

Google’s AI Overviews will reportedly be updated with a feature that lets users dive deeper into a topic. According to the report, Google is experimenting with a new capability for its artificial intelligence (AI) search summary feature that allows users to highlight a portion of the text displayed in an AI Overview and run a secondary AI search on it. The feature was spotted in the latest beta version of the Google app. It is still in development, and it is unknown when it will be rolled out to all users.

How Google could expand its AI Overviews feature

Android Authority spotted the new AI Overviews feature in beta version 15.45.33 of the Google app. It allows users to delve further into AI-generated summaries. The feature was discovered in collaboration with AssembleDebug, an account that posts about new features spotted in various apps and the Android operating system.

AI Overviews typically appear for most queries on Google Search. When users perform a search, the feature pulls relevant information from various websites to provide a quick AI-generated overview of the topic.

The new feature allows users to select specific text in an AI Overview and view a separate AI-powered summary of it, the report said. This means users can ask the AI for deeper insight into the topic, building on the previous answer. However, the report emphasizes that users cannot simply highlight any text to trigger a new AI Overviews query.

According to the description, the feature appears to be available only when users select text that calls for further explanation of the topic. Furthermore, it is reportedly not currently possible to go beyond this second level of AI search on a subject, so users can’t dive into a topic indefinitely by repeatedly selecting text from the previous AI Overview.

Notably, the second-level AI Overview appears below the first one, according to the publication. Because the feature is still in development, it is unclear how long it might take before it is made available to the public.