Apple Vision Pro’s new operating system subtly expands the headset’s potential

I had surgery this summer, and during my recovery I routinely used two gadgets: Meta's Ray-Bans for easy calling and listening to music, and the Apple Vision Pro to escape into movies. For the latter, I used a developer build of VisionOS 2, whose features are now available to anyone with the mixed-reality headset. Those who own one will appreciate a few subtle changes that make using it a little more interesting. I say "a little" because the Vision Pro, while advanced, is still limited in what it can do.

The new gestures in VisionOS 2 are fantastic

Apple assumes you'll do everything on the Vision Pro with your eyes and hands, and the built-in eye and hand tracking is so good that this mostly holds up. The latest OS also adds interfaces that appear directly above your hands, like pop-up dashboards. Meta has had this on its Quest headset for years, but Apple's riff on the feature feels remarkably fluid and responsive.

When I turn my hand up, a floating circle icon appears, and a tap opens the grid of apps. It’s faster and easier than pressing the Digital Crown button on the top of the Vision Pro, which is what I used to do.

Look, there’s my pop-up clock with volume and control widgets.

When I turn my hand back over, a Control Center widget appears that I like even more. It replaces the strange floating dot at the top of the headset's view (which still shows up occasionally). With the dot gone, nothing interrupts my field of vision. The widget also shows the time, so the Vision Pro's "what time is it?" problem is instantly solved.

The widget also shows battery life, volume level, and Wi-Fi connection. Tapping it brings up a submenu with other controls, like connecting to my Mac as a virtual display or checking notifications. Tapping, holding, and dragging adjusts the volume, so I no longer have to reach up to the Digital Crown.

It's so good and so simple that I want more. I want to be able to control everything on the Vision Pro in this mode with simple sub-gestures instead of digging through larger grids of apps and menus. It can happen, and it should.

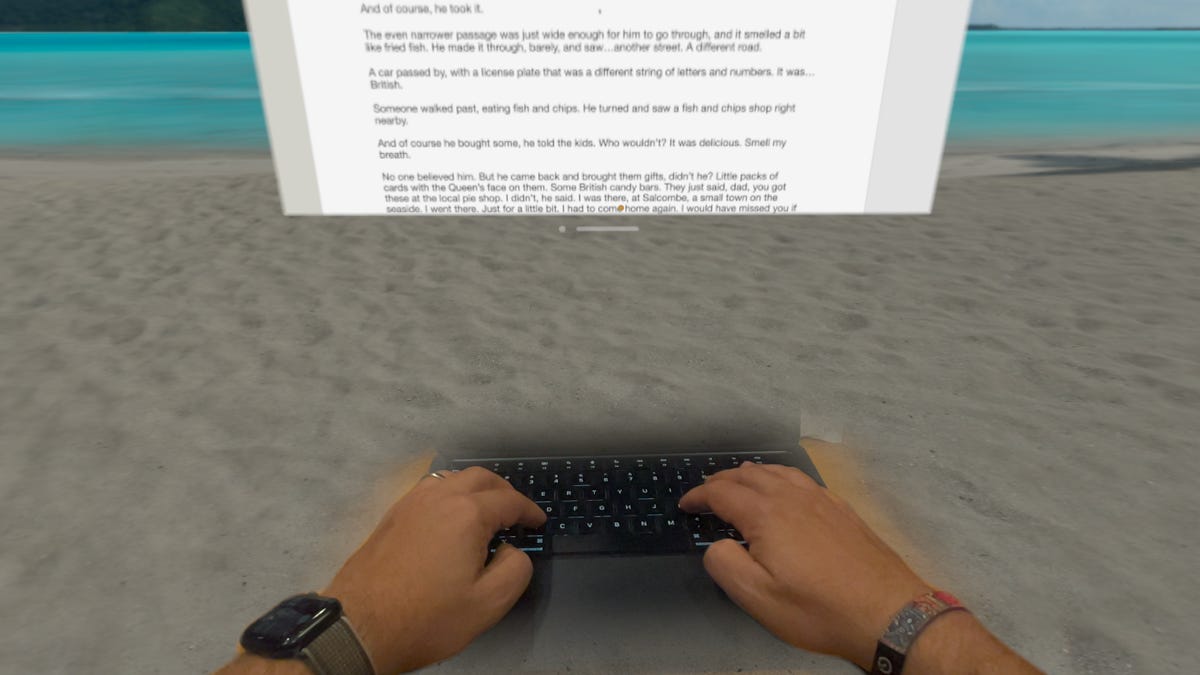

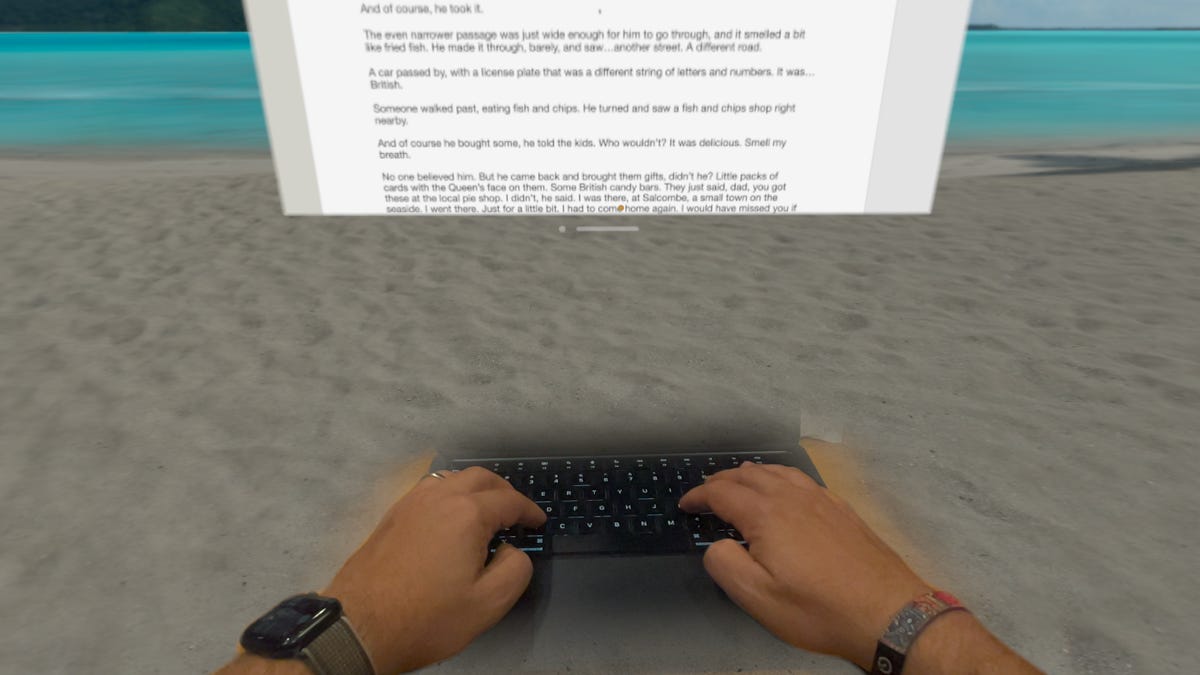

Now my keyboard can float on the beach.

Keyboards work when you’re in immersive environments

Meta was the first to recognize physical keyboards and make them visible in VR, and I loved it at the time. Apple's headset now automatically recognizes a Mac or Bluetooth keyboard in immersive environments, so I can clearly see my hands and the keyboard while surrounded by a beach far more beautiful than my cluttered office. It's easier to write in a quiet environment.

3D photo conversion: FOMO delight

I don't always shoot 3D video clips on my iPhone 15 Pro, so many of my memories aren't ready to view in 3D on the Vision Pro later. The same problem affects my entire older library of photos and videos. Apple has a brilliant AI feature in VisionOS 2 that all but eliminates the need for a new iPhone 16 to capture 3D photos: it converts 2D photos to 3D automatically.

The conversion is fast, and photos still display normally in 2D on your Mac, iPhone, and elsewhere. But in the Vision Pro, old photos look remarkably 3D. Old photos of my kids, trips I took a decade ago… they made me emotional. The depth conversion isn’t always perfect, but it’s often astonishing. It’s made browsing my photo library a lot more enjoyable.

This is my YouTube video floating over the beach: Safari videos can now be embedded in environments.

Safari is better for videos and WebXR

Apple now enables WebXR immersive experiences without making you turn them on in settings (finally), so you can launch a VR experience on the web without needing a dedicated Vision Pro app. Videos also play in immersive environments now, making Netflix, YouTube, and other video services without Vision Pro apps feel more like native apps. It's something I've wanted from the start. Is it a game changer? No, but it's welcome.

Meditation now follows your breathing

One quirky little bonus I noticed during an in-headset mindfulness meditation (something I like to do once a week) is that the headset recognizes my inhales and exhales and matches the animations to my breathing. Apple hasn't pursued other health and fitness possibilities with its mixed-reality spatial computer (yet), but this little tweak makes me wonder how much more the Vision Pro could be tuned to be aware of movement, posture, and other health-related matters.

This curved Mac monitor in Vision Pro isn’t here yet, but it should arrive later this year.

I can’t wait to try out the giant curved Mac monitor

A feature coming later this year promises to expand the virtual Mac display you can enable in Vision Pro into a giant curved monitor that wraps around your entire field of vision. Meta already has similar technology for its headsets, but it'll be great to try it on Apple's beautiful micro OLED display. It's still not the same as multiple virtual Mac monitors, but it does feel like a much larger canvas for whatever apps you want to keep open at once.

Still unknown: What about the iPhone, Watch, and Apple Intelligence?

I've been working quite a bit on my Mac in Vision Pro over the past six months, but I'm shocked that the iPhone, the device I always have with me, still has no direct interface. I'd use the iPhone as a physical controller for the Vision Pro if I could, either to type faster than the awkward air-tapping I have to do on the headset now, or to share information back and forth, expanding a shared item into a larger Vision Pro view. VisionOS 2 does have a way to AirPlay your phone's screen directly to the Vision Pro in a pop-up window, but it's not the same.

Apple already has hooks for Messages, AirDrop, and other iCloud-based features (as well as copy-and-paste between devices), but I'm waiting for more. And speaking of Apple devices, the Watch would make a perfect accessory, too. It already recognizes wrist gestures and has haptic feedback and a touchscreen. It could also double as a fitness tracker for mixed-reality apps, something the competing Quest headset already does.

And what about Apple Intelligence? The generative AI features that Apple has been announcing since June haven't yet made it to the iPhone, iPad, or Mac, but the Vision Pro should be on that list as a clear next recipient of an AI upgrade. I use Siri a lot on the Vision Pro to open apps and perform quick actions, and an enhanced assistant that could call up more things directly in mixed reality makes a lot of sense. It sounds like that won't happen until next year, though. Perhaps Apple will add connected support for the iPhone, and for the Apple Watch, at the same time.