Gemini Live is the best AI feature I’ve seen from Google so far

With ChatGPT rolling out Advanced Voice Mode to some users this month and Apple on the cusp of launching Apple Intelligence, Google has fired back with Gemini Live, a version of Gemini AI that lets you talk to your phone as if it were a real person. Gemini Live is currently only available to Gemini Advanced customers, as part of the $20 (£18.99, AU$30)-a-month AI Premium subscription, but should be available to all subscribers with a compatible phone, not just those with a shiny new Google Pixel 9, which the search giant just launched.

My first impression is that Gemini Live is quite impressive to hear in action. I can finally chat with my phone as if it were a real person, something I’ve wanted to do ever since voice assistants like Google Assistant, Siri, and Alexa became a thing. Unfortunately, over the last few years I’ve been reduced to using Siri and Alexa to set timers or play music, because there’s a limit to how useful they can be, and they usually direct me to a web page if I ask something too complicated. With Gemini Live, on the other hand, I can have a conversation about almost anything and get a meaningful answer. It understands my words and intentions on a whole new level. If I ask Gemini how the US did at the recent Paris Olympics, it responds with a real answer. If I ask it to recommend a diet plan, it gives me some ideas based on what it knows about me.

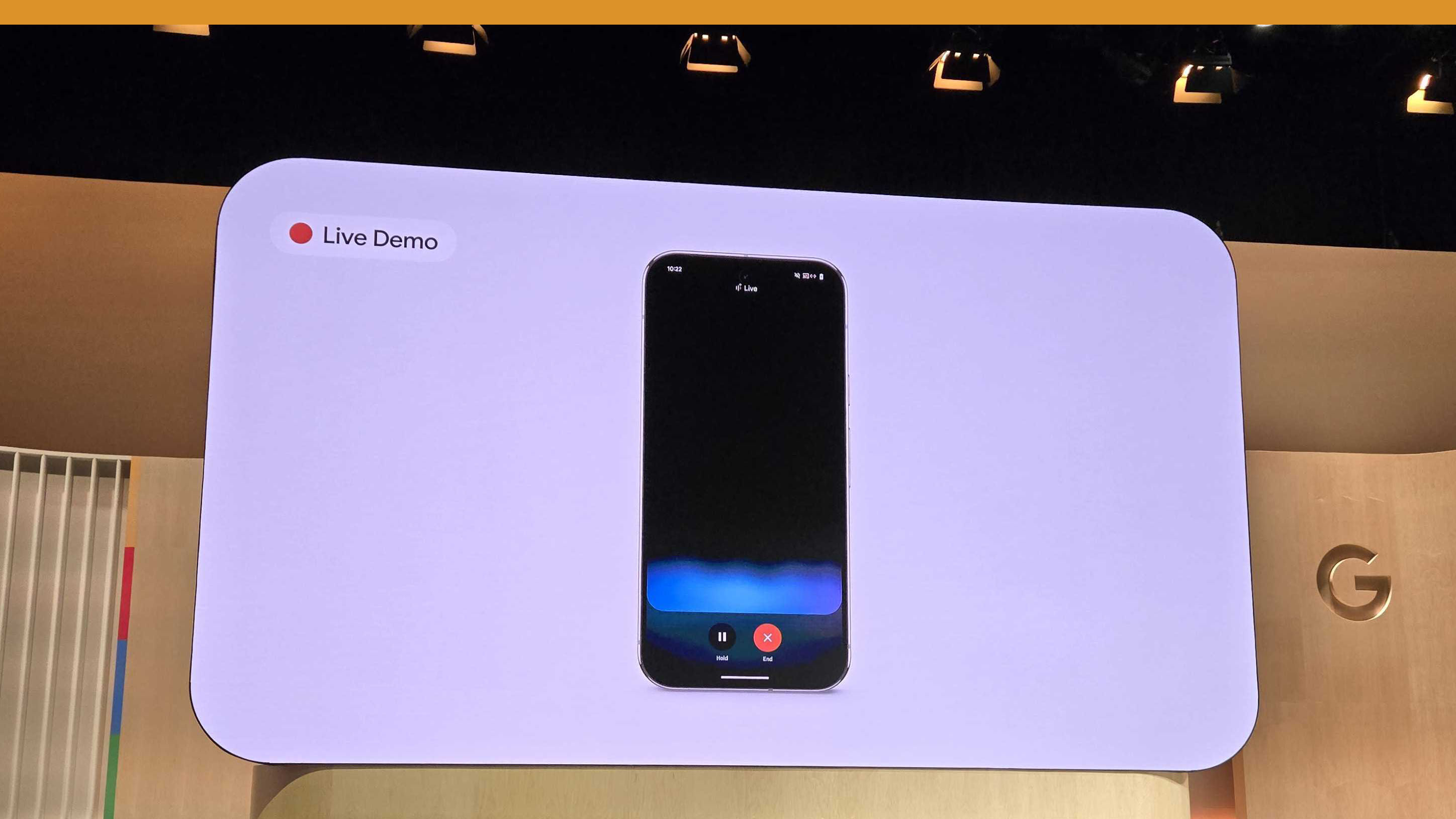

Sure, I could already talk to Gemini on an Android phone and ask it simple math questions, or ask it about the weather, but the new Gemini Live is a whole new beast. With Gemini Live, I can have a real conversation about complex topics, ask it to brainstorm ideas, or ask it for advice. To make the conversation more realistic, I can also interrupt its responses, so if I feel like the answer I’m getting is taking too long, I can cut Gemini off and ask it something else. It feels a little rude, but machines don’t have feelings, right? I also don’t have to press anywhere on the screen to talk to Gemini, so it’s a completely hands-free experience, meaning I can use it while doing other tasks.

Gemini Live is also multi-modal, so it can “look” at images or videos on your phone and answer questions about them. This can be especially useful if I want to take a photo of something and then ask Gemini Live a question about it. It will intelligently extract information from the photos and use that in its answer. Despite a few hiccups in the live demo at the recent Made by Google event, this is really useful.

Google is still adding features to Gemini (and will presumably keep adding them forever), and “in the coming weeks” it will add extensions that will start to make it really useful, allowing Gemini to integrate with various apps, like Calendar and Gmail. So, you’ll be able to say things like, “Find the specs James emailed me a few weeks ago,” and it will do just that. That feature could be the sleeper hit for Gemini Live.

All in all, Gemini Live is the best use of AI I’ve seen from Google yet. Google has invested a lot of time and money into integrating AI into its search pages with AI Overviews, which is not something I want. I don’t want AI to take over my searches and get in the way with useless answers when all I want is to be directed to a web page. AI can still get its facts wrong, and Gemini is no different. I just want AI to help me with my life, and while there’s a lot more to come that will take Gemini Live to a whole new level, for now I can say goodbye to Google Assistant and have an actual conversation with my phone, and that’s pretty awesome.