Google’s AI Overviews are often so confidently wrong that I’ve lost all confidence in them


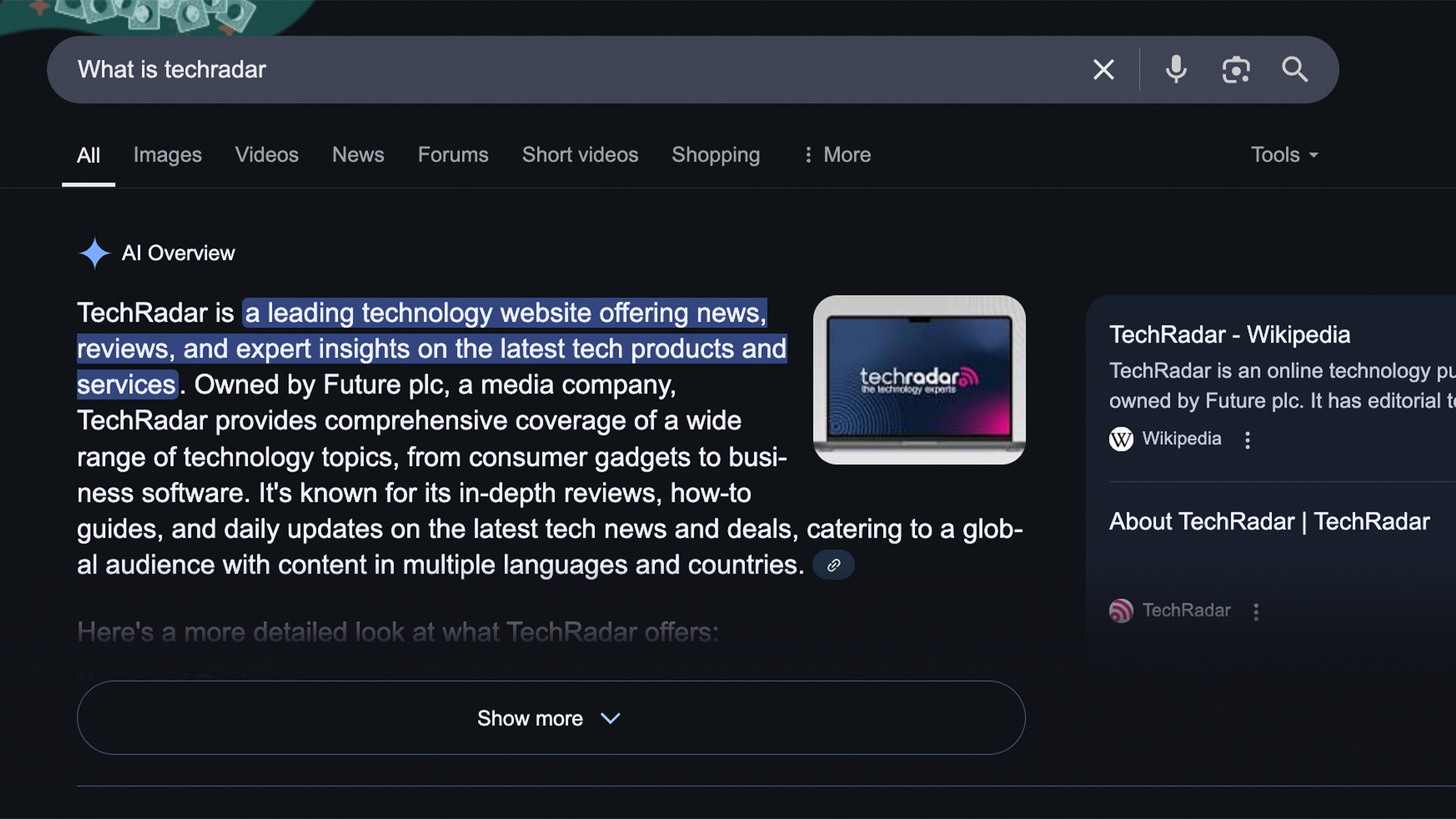

Have you googled something lately, only to be met by a cute little diamond logo above a few magically appearing words? Google’s AI Overview combines Google Gemini’s language models (which generate the answers) with retrieval-augmented generation, which pulls in the relevant information.

In theory, it has made an incredible product, the Google search engine, even easier and faster to use.

However, because creating these summaries is a two-step process, problems can arise when there is a disconnect between retrieval and language generation.

Even when the retrieved information is accurate, the AI can make erroneous leaps and draw strange conclusions when generating the summary.

This has led to some famous gaffes, such as when it became the laughing stock of the internet in mid-2024 for recommending glue as a way to keep cheese from sliding off your homemade pizza. And we loved the time it described running with scissors as “a cardio exercise that can improve your heart rate and require concentration and focus”.

This led Liz Reid, head of Google Search, to publish an article titled About last week, in which she said these examples “highlighted some specific areas that we needed to improve”. Beyond that, she blamed “nonsensical queries” and “satirical content”.

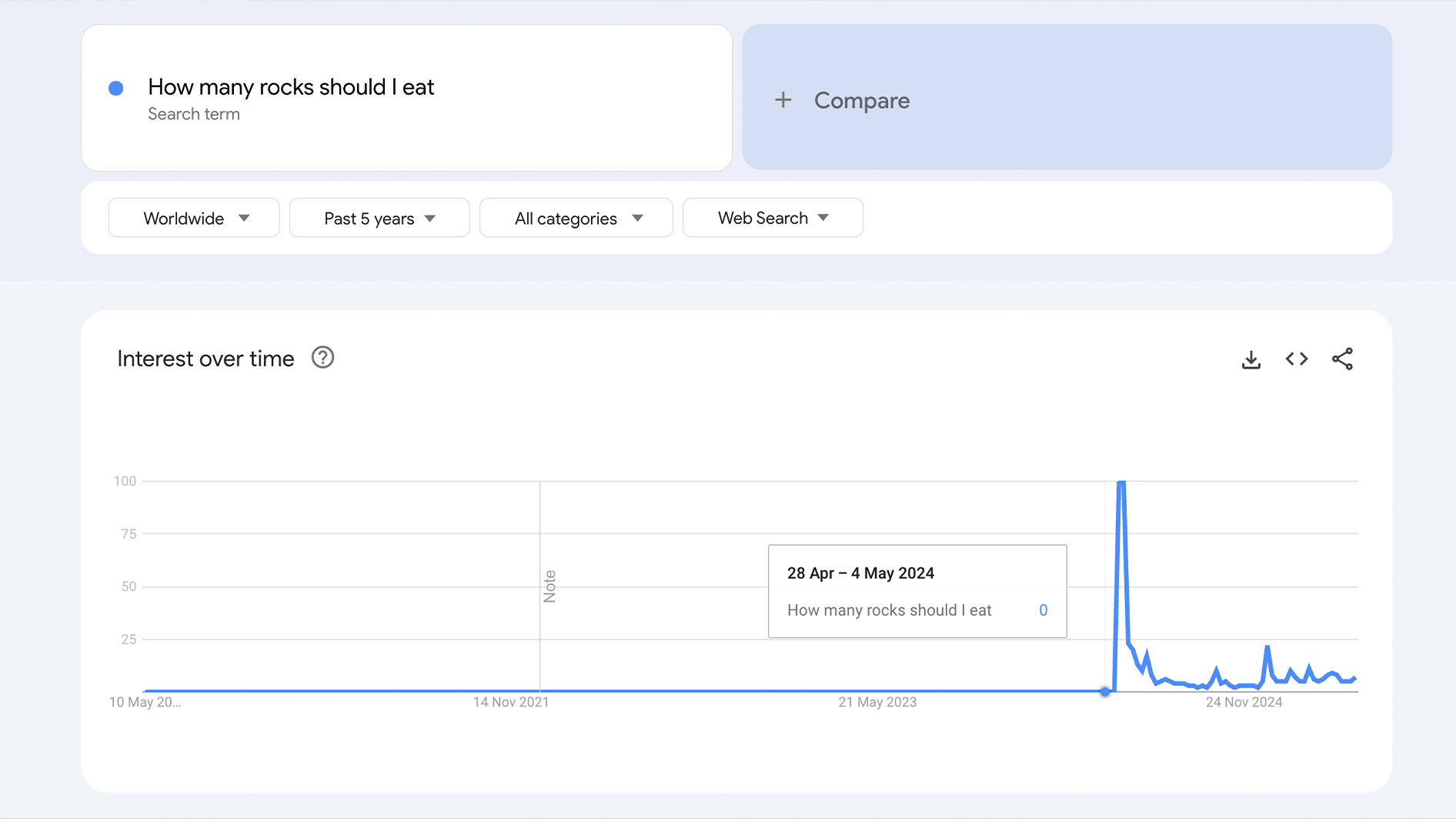

She was at least partially right. Some problematic queries were searched purely to make the AI look stupid. As you can see below, the query “How many rocks should I eat?” was not a common search before AI Overviews were introduced, and it hasn’t been one since.

Almost a year after the pizza-glue fiasco, however, people are still tricking Google’s AI Overviews into fabricating information, or “hallucinating”, the euphemism for AI making things up.

Many misleading queries now seem to be ignored at the time of writing, but last month Engadget reported that AI Overviews would invent explanations for made-up idioms such as “you can’t marry pizza” or “never rub a basset hound’s laptop”.

So, AI is often wrong if you deliberately mislead it. Big deal. But now that it is used by billions and draws on crowd-sourced medical advice, what happens when a genuine question causes it to hallucinate?

AI works beautifully if everyone who uses it checks where it got its information, but many people, if not most, won’t do that.

And that is the core problem. As a writer, I find Overviews inherently a bit annoying because I want to read content written by people. But even putting my pro-human bias aside, AI becomes seriously problematic when it is so easily unreliable. And it has become demonstrably dangerous now that it is essentially ubiquitous in search, and a certain share of users will take its information at face value.

I mean, years of searching have trained all of us to trust the results at the top of the page.

Wait … is that true?

Like many people, I sometimes struggle with change. I didn’t like it when LeBron went to the Lakers, and I stuck with an MP3 player over an iPod for far too long.

However, since they are now the first thing I usually see on Google, Google’s AI Overviews are a bit harder to ignore.

I tried using it like Wikipedia: possibly unreliable, but good for reminding me of forgotten info or for learning the basics of a subject where it won’t cause me agita if it isn’t 100% accurate.

But even with seemingly simple questions it can fail spectacularly. For example, the other week I was watching a movie and a guy in it really looked like Lin-Manuel Miranda (creator of the musical Hamilton), so I googled whether he had any brothers.

The AI overview told me that “yes, Lin-Manuel Miranda has two younger brothers named Sebastián and Francisco.”

For a few minutes I thought I was a genius at recognizing people… until a little more research showed that Sebastián and Francisco are actually Miranda’s two children.

Wanting to give it the benefit of the doubt, I figured it would have no problem listing quotes from Star Wars to help me think of a headline.

Fortunately, it gave me exactly what I needed: “Hello there!” and “It’s a trap!”, and it even quoted “No, I am your father” rather than the all-too-common “Luke, I am your father”.

Alongside these legitimate quotes, however, it claimed that Anakin had said “If I go, I go with a bang” before his transformation into Darth Vader.

I was shocked that it could be so wrong… and then I started second-guessing myself. I gaslit myself into thinking I must be mistaken. I was so unsure that I triple-checked whether the quote existed and shared it with the office, where it was quickly (and correctly) dismissed as another bout of AI lunacy.

This little bit of self-doubt, about something as silly as Star Wars, scared me. What if I had no knowledge of the subject I was asking about?

This study by SE Ranking actually shows that Google’s AI Overviews avoid (or respond cautiously to) topics such as finance, politics, health and law. This means Google knows its AI is not yet up to the task of more serious questions.

But what happens when Google thinks it has improved to the point where it is?

It’s the technology … but also how we use it

If everyone who uses Google could be trusted to double-check the AI results or click through to the Overview’s source links, the inaccuracies wouldn’t be a problem.

But as long as there is an easier option, a more frictionless path, people tend to take it.

Despite having more information at our fingertips than at any previous time in human history, in many countries our literacy and numeracy skills are declining. Case in point: a 2022 study found that only 48.5% of Americans reported reading at least one book in the previous 12 months.

It is not the technology itself that is the problem. As eloquently argued by assistant professor Grant Blashki, how we use the technology (and indeed, how we are steered into using it) is where the problems arise.

For example, an observational study by researchers at Canada’s McGill University found that regular GPS use can lead to poorer spatial memory and an inability to navigate on your own. I can’t be the only one who has used Google Maps to get somewhere and had no idea how to get back.

Neuroscience has clearly shown that struggle is good for the brain. Cognitive load theory holds that your brain has to work through things in order to learn them. It is hard to imagine struggling much if you search a question, read the AI summary and then call it a day.

Make the choice to think

I’m not committing to never using GPS again, but given that Google’s AI Overviews are regularly unreliable, I would get rid of AI Overviews if I could. Unfortunately, there is no way to do that for now.

Even hacks such as adding a swear word to your query no longer work. (And while using the F-word still seems to work most of the time, it also produces stranger and more, uh, ‘adult-oriented’ search results that you probably aren’t looking for.)

Of course I still use Google, because it’s Google. It isn’t going to reverse its AI ambitions anytime soon, and although I wish it would restore the option to opt out of AI Overviews, maybe it’s better the devil you know.

For now, the only real defense against AI misinformation is to make a concerted effort not to use it. Let it take notes in your work meetings or come up with some pick-up lines, but when it comes to using it as a source of information, I will scroll past it and look for a high-quality, human-authored (or at least human-checked) article among the top results, as I have done for almost my entire life.

I’ve said before that one day these AI tools could genuinely become a reliable source of information. They may even become smart enough to take on politics. But today is not that day.

In fact, as the New York Times reported on 5 May, as the AI tools from Google and ChatGPT become more powerful, they are also becoming increasingly unreliable, so I’m not sure I will ever trust them to summarize a political candidate’s policies.

In tests of these ‘reasoning systems’, the highest recorded hallucination rate was a whopping 79%. Amr Awadallah, chief executive of Vectara, an AI agent and assistant platform for businesses, put it bluntly: “Despite our efforts, they will always hallucinate.”
