I tried out Snap’s new standalone AR glasses that do mixed reality, AI, and outdoor work

In a New York hotel on a warm September day, I stood on a terrace, leaned over and petted a cute pet that wasn’t there. Peridot’s virtual friend hopped around and followed me. It’s a lot like other virtual pets I’ve tried on phones and even headsets like the Meta Quest 3. This time, I saw it projected through clear lenses that automatically dimmed like sunglasses to ensure the virtual experience was clearly visible in bright daylight. All I was wearing was this pair of thick but self-contained glasses: Snap’s new AR Spectacles. Are these the future of something I might one day wear? And if so, how long before that happens?

I saw a lot of viral videos earlier this year of people wearing the Apple Vision Pro headset outdoors, for skiing, skating, in the park… wherever. The Vision Pro and Quest 3 headsets are not designed for daily outdoor use. But Snap’s AR Spectacles are. Announced at the company’s developer conference in Los Angeles, they’re the latest iteration of Spectacles that Snap has been making for years. Snap first created standalone AR glasses in 2021; I tried them out in my backyard during the pandemic. The new Spectacles are bigger, but also more powerful. They support hand gestures like the Vision Pro and Quest 3, and have a full-on Snap OS that can run a browser, launch multiple apps, and connect to nearby phones.

These Snap Glasses aren’t even made for regular people. They’re developer hardware that’s being offered as a subscription, for $99 a month as part of Snap’s developer kit. That’s a sign that Snap knows the world isn’t ready for AR glasses, and neither is consumer tech. Snap is getting a foot in the door, a little ahead of its competitors.


Companies like Meta, and likely Google and Apple as well, are trying to develop smaller AR glasses that can overcome the limitations of larger mixed reality VR headsets. I have seen attempts to make these types of glasses, but to stay small they usually need to be connected to phones, computers, or other external processors in order to work.

Snap’s AR Spectacles put all the processing and battery power directly on the glasses — nothing else is needed. That means they’re easy to wear without any extra wires, but the Spectacles in their current form are bulky and far weirder than any glasses I’d ever put on my face. The frames are thick, and the lenses still have rainbow-colored spots in the centers where waveguides reflect virtual images projected by side-mounted LCOS mini projectors.

The new Spectacles have thick arms that house the processors, projectors and batteries.

Battery life is also extremely limited. At around 45 minutes, these are far from suitable for all-day use. Though, like the 2021 version of Snap’s AR Glasses, these are still designed for developers. According to Snap CTO and co-founder Bobby Murphy, these are exploratory devices to see how Snap’s existing AR Lenses on the Snapchat phone app could make a useful leap into glasses form.

This is the exact same approach Snap took in 2021, but with a boost in processing power. On board are two Qualcomm processors (I don’t know which ones) that delivered fairly sharp, if occasionally stuttery, graphics. The glasses only have a 46-degree viewing angle, which is fine for AR glasses but far less than VR headsets. It felt like I was watching mixed reality through a tall, narrow window the size of a large phone screen.

One big difference between my 2021 demo and now is the prescription inserts. Last time I had to wear contacts, but snap-on lenses similar to what Meta and Apple already offer work for these Spectacles. Unfortunately, Snap didn’t have my prescription during the demo, so my AR morning was a bit blurry. It was good enough to see experiences.

An image from Snap of how group shared experiences with hand tracking might work. In glasses, the field of view is narrower than my own vision.

Hand tracking, but no eye tracking

Snap’s Spectacles use external cameras to track the world around me, but add hand tracking like the Apple Vision Pro and Meta Quest headsets. I was able to tap virtual buttons, pet virtual creatures, paint in the air, or pinch things from a distance. Snap OS has an app dashboard that floats in the air, plus virtual buttons that appear above my hand when I turn it over.

The Spectacles don’t have eye tracking, so selecting objects takes a bit more effort than Apple’s glance-to-select Vision Pro. Phones can also connect to the glasses, offering another way to interact.

An extension for phones

One thing that’s different about Snap’s approach compared to Meta or even Apple at this point is that it’s connected to phones. I tried several demos where I used a nearby phone to control AR experiences on the glasses. I used a phone as a remote to fly an AR helicopter around the room, using on-screen buttons for control. I also held a phone like a golf club and teed off to take a swing at an AR golf course that looked like it was half-teleporting into the hotel room I was in.

Snap’s Snapchat phone app will control the glasses, allowing anyone to connect and view the AR experiences I see on the glasses. The long-term goal, according to Snap, is to allow phones that use Snapchat to interact with the glasses.

“We absolutely believe there’s a lot of room to explore the connection between phones and Spectacles,” Snap’s Murphy told me.

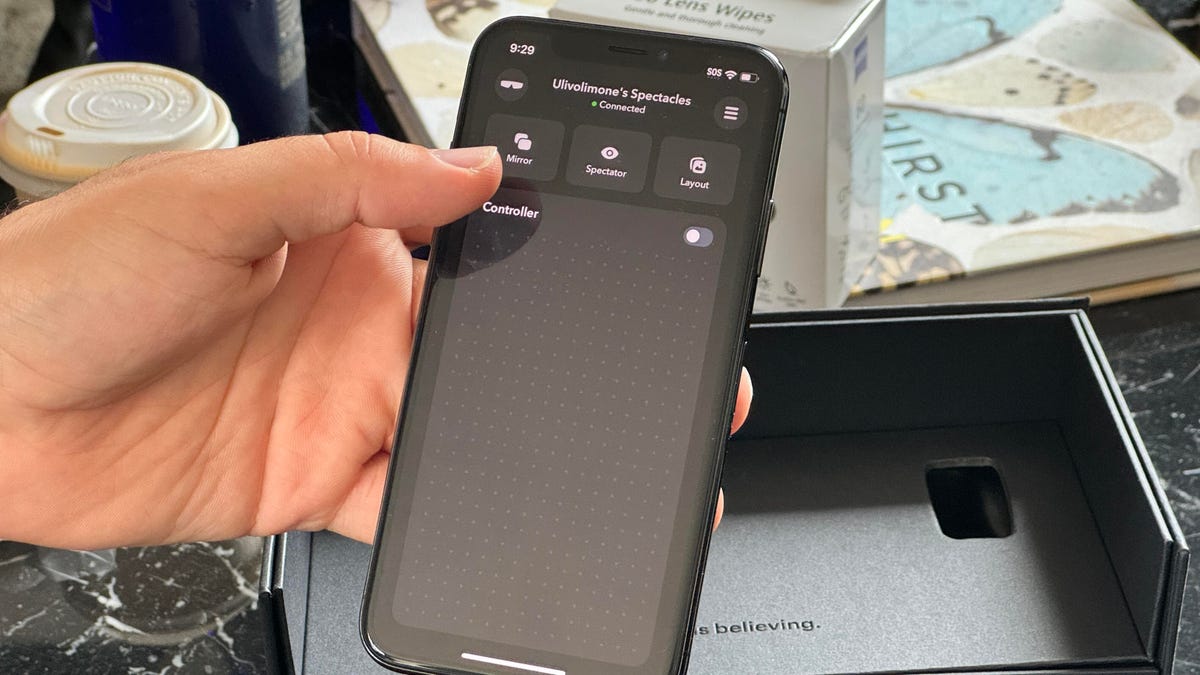

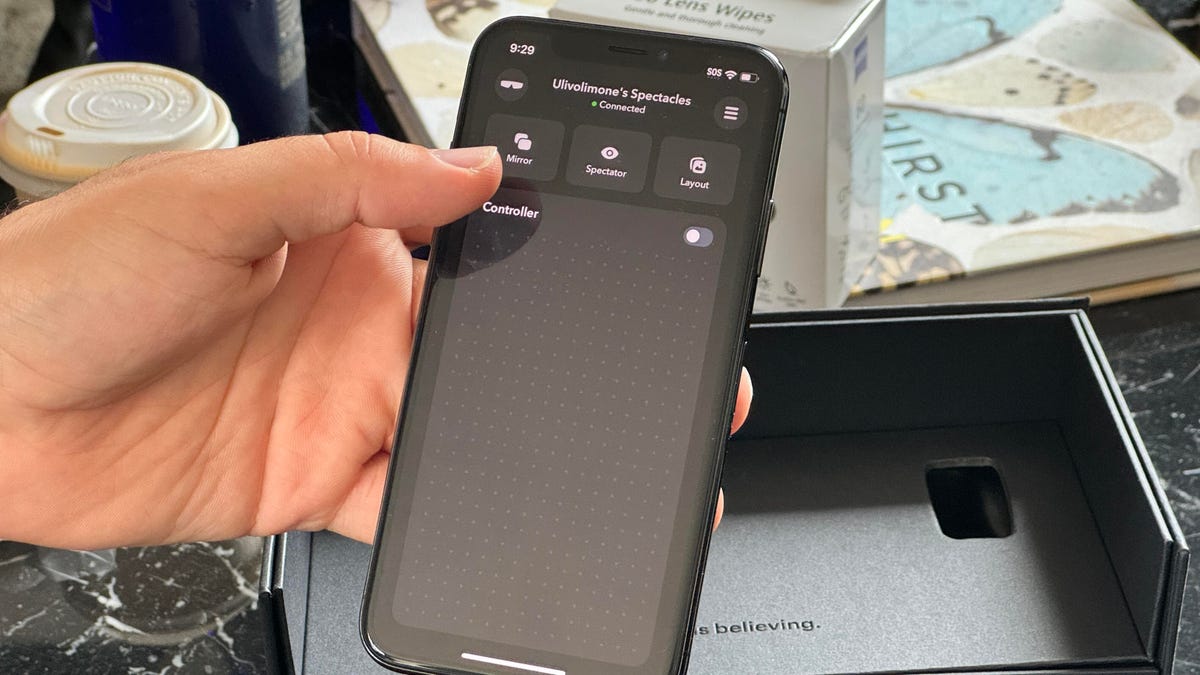

Snap’s phone app acts as an optional control panel for the glasses and can also function as a controller.

In the short term, the glasses are only made to work with other AR Spectacles wearers, and probably for good reason: Phone operating systems don’t yet play nice with AR glasses. Neither Apple nor Google has made any moves to make headsets and glasses feel truly integrated, and until they do, other companies face a compatibility bottleneck unless they use special phone apps or, like Xreal, build their own custom phone-like hardware. Even then, connectivity isn’t ideal.

Snap already has a wide range of AR tools on its Snapchat phone app, from world-scanning collaborative experiences to location-specific AR. Snap’s AR capabilities have improved since 2021, and these new glasses could take advantage of that.

Working with groups

One thing I tried was collaborative painting, using my fingers to draw in the air while one of Snap’s team members drew next to me, wearing another pair of glasses. The glasses can recognize another wearer nearby and share an experience, or even work together to scan a room into a mixed reality mesh using the four built-in cameras.

Connecting in the hotel room wasn’t always instant, but that glasses-to-glasses collaboration is a big part of the pitch here. Snap wants to take group multiplayer experiences to outdoor spaces, perhaps even museums or art shows, to test how well they could work for live, immersive activations. A Lego building experience was simple, but it showed potential when a group of people could work together to create a site-specific sculpture.

Designed for outdoor wear, these glasses feature auto-dimming lenses similar to the technology found on Magic Leap 2. The Magic Leap 2 has a large external clip-on processor.

Some of the early partners Snap is working with include Niantic, best known for Pokémon Go and a company that has also been shaping the future of outdoor AR glasses for years. Another partner, ILM Immersive, has already created Star Wars and Marvel experiences on VR and mixed reality headsets.

Glasses with prescription lenses in them. I tried to match my own vision as best as possible for the demo.

Are these opportunities for more AI wearables and AI AR?

Snap is also partnering with OpenAI, a move that hardly seems surprising for 2024. This spring saw a wave of AI wearables with cameras, including Meta’s Ray-Bans and the Humane AI Pin, that attempted to show the promise of “multimodal” generative AI that could access cameras and audio inputs together for assistance or for generative AI creative apps. Mixed reality headsets like the Meta Quest 3 and Apple Vision Pro have yet to take advantage of camera-enabled AI because camera permissions are more locked down for developers.

Snap is broadening camera access: OpenAI connections will be able to use the Glasses’ cameras and microphones for generative AI lens apps similar to what’s now available in Snapchat’s phone app. (Snap also enables camera access for AR apps, but online access is locked for those apps, while online camera access is open for OpenAI connections.)

“Where people want to use third-party services, we’ll be more cautious and controlled and potentially work with developers or third-party service providers to build these types of protections,” Murphy said. On the Snapchat phone app, there are already generative AI tools that use the camera. We can finally see some of them now making the jump to glasses, too.

I tried generating some 3D emojis using Snap’s generative AI, and navigated through an educational Solar System Lens app that took my requests by voice. I noticed that responses during my demos were sluggish and the glasses didn’t always understand what I was saying (though that could have been the hotel room connection or something else). Snap opening up camera access to AI-infused AR feels like a step up from where Meta and Apple currently stand. That could change quickly, though: Meta is expected to debut new AI and AR updates soon, while Apple could bring Apple Intelligence features to Vision Pro next year.