I used Veo 3 to recreate the first YouTube video, and the results are almost too good


We all know the story of the first YouTube video: a grainy 19-second clip of co-founder Jawed Karim at the zoo, commenting on the elephants behind him. That video was a pivotal moment for the digital world, and in some ways it is a reflection, or at least an inverted mirror image, of today as we digest the arrival of Veo 3.

Part of Google Gemini, Veo 3 was unveiled at Google I/O 2025 and is the first generative video platform that can, from a single prompt, generate a video with synchronized dialogue, sound effects, and background noise. Most of these 8-second clips arrive within five minutes of entering the prompt.

I have been playing with Veo 3 for a few days, and for my latest challenge I went back to the start of social video and that YouTube "Me at the zoo" moment. Specifically, I wondered whether Veo 3 could recreate that video.

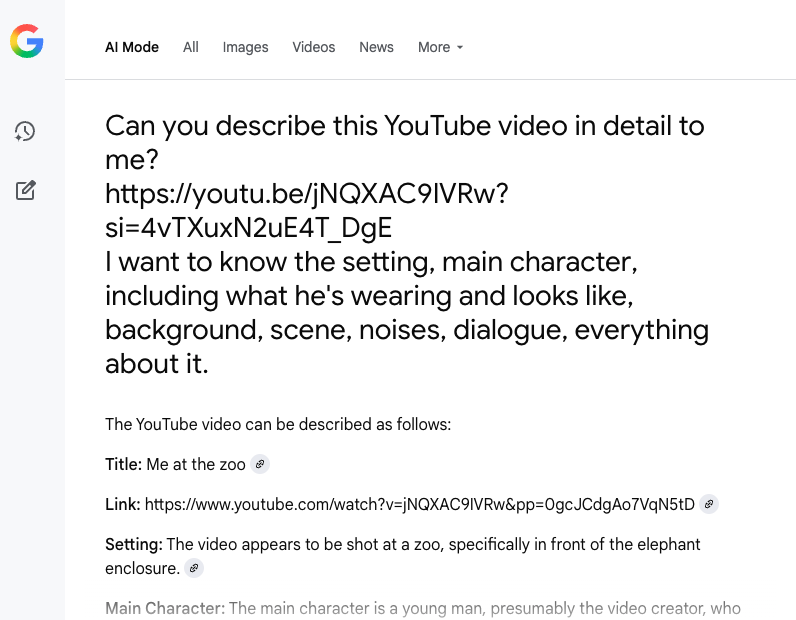

As I've written before, the key to a good Veo 3 result is the prompt. Without detail and structure, Veo 3 tends to make the choices for you, and you usually don't end up with what you want. For this experiment, I wondered how I could possibly describe all the details I wanted to extract from that short video and deliver them to Veo 3 as a prompt. So, of course, I turned to a different AI.

Google Gemini 2.5 Pro currently can't analyze a URL, but Google AI Mode, the brand-new form of search that is quickly spreading across the US, can.

Here is the prompt I dropped into Google's AI Mode:

Google AI Mode came back almost immediately with a detailed description, which I copied and pasted into the Gemini Veo 3 prompt field.

I did some editing, mostly removing phrases such as "The video appears …" and the closing analysis at the end, but otherwise I left most of it intact and added this to the top of the prompt:

“Let’s make a video based on these details. The output must have a 4:3 aspect ratio and look as if it was recorded on 8mm videotape.”

It took a while for Veo 3 to generate the video (I suspect the service is being hammered right now), and because it only creates 8-second chunks at a time, the result was incomplete, with the dialogue cut off mid-sentence.

Still, the result is impressive. I wouldn't say the main character looks much like Karim. To be fair, the prompt doesn't describe Karim's haircut, the shape of his face, or his deep-set eyes, and Google AI Mode's description of his outfit was probably insufficient. I'm sure it would have done better if I had fed it a screenshot of the original video.

Note to self: you can never provide enough detail in a generative prompt.

8 seconds at a time

The zoo in the Veo 3 video is more attractive than the one Karim visited, and the elephants are much farther away, although they are moving around.

Veo 3 nailed the film quality and gave the clip a nice 2005 look, but it missed the 4:3 aspect ratio. It also added archaic and unnecessary text labels at the top, which fortunately disappear quickly. I now realize I should have removed the "title" bit from my prompt.

The audio is very good. The dialogue syncs well with my protagonist's lips, and if you listen carefully, you can also hear the background noise.

The biggest problem is that this covered only half of the short YouTube video. I wanted a complete recreation, so I went back with a much shorter prompt:

Continue the same video and have him look back at the elephants and then look at the camera as he says this dialogue:

“Trunks. And that’s cool.” “And that’s pretty much all there is to say.”

Veo 3 kept the setting and the main character but lost part of the plot and dropped the old-school grainy look of the first generated clip. This means that when I present the two together (as I have above), we lose considerable continuity. It's like a time jump in which the film crew suddenly got a much better camera.

I'm also a bit frustrated that all my Veo 3 videos have nonsensical captions. I have to remember to ask Veo 3 to remove them, hide them, or place them outside the video frame.

I keep thinking about how hard it must have been for Karim to film, edit, and upload that first short video, and how I just made the same clip without any people, lighting, microphones, cameras, or elephants. I didn't have to transfer footage from tape or even from an iPhone. I just pulled it out of an algorithm. We really have stepped through the looking glass, my friends.

I learned one more thing from this project: as a Google AI Pro member, I get two Veo 3 video generations per day. That means I can do this again tomorrow. Let me know in the comments what you'd like me to make.
