I’m not yet impressed with AI on phones. Here’s what it will take to change my mind

If phone manufacturers like Samsung, Google and Apple are to be believed, AI will change the way we use our mobile devices. The question is: when?

This year saw a wave of AI-powered features arrive on our phones, from tools for rewriting messages to translating texts without having to leave your messaging app. Generative AI lets you erase objects from photos with a few quick swipes, or generate polished images from a simple command or rough drawing.

While these updates arguably bring added convenience to your phone, they don’t feel as groundbreaking as the tech giants would have you believe. This first wave of AI-focused phone features is designed for very specific use cases, so specific, in fact, that I often forget to use them. The new capabilities that seem most promising, like Google’s Circle to Search and Apple’s Visual Intelligence, require users to think about navigating their phones differently, which presents its own challenges.

To be fair, tech companies have made it clear that this is the beginning of a multi-year evolution of mobile software. Getting it right matters, because generative AI is widely expected to shape the future of the internet and how we access information. Generative AI adoption in the US is outpacing PC and internet adoption, according to a September economic research report from the Federal Reserve Bank of St. Louis. By not integrating generative AI into their devices, tech companies risk being left behind, just as the companies that missed the shift to smartphones in the early 2000s were.

So far we’ve seen hints about where smartphone software is headed, with new ideas emerging such as a phone interface that doesn’t rely heavily on apps and AI agents that can act on your behalf. For now, those are just ideas, but I hope 2025 brings concrete steps that push phones in that direction.


AI features in 2024 felt trivial and nonessential

Writing Tools arrived in the first wave of Apple Intelligence features.

Generative AI, or AI that creates content or responses based on prompts, captured the world’s attention in 2023, thanks in large part to ChatGPT. But 2024 was the year that phone makers started embracing the technology in earnest. Samsung began rolling out Galaxy AI in January, while Apple unveiled Apple Intelligence in June, months ahead of its October rollout. Google announced AI improvements at intervals throughout 2024, from Gemini Live and Gemini’s ability to understand what’s on your phone’s screen at Google I/O in May to the new image generation tools on the Pixel 9 family in August.

Many of these early features are intended to solve problems that I’m not sure need solving. For example, I rarely find myself in a situation where I have to rewrite a text message to make it sound more professional or friendly. Most of the people I text are close friends or family members, so I usually don’t think much about the wording or tone. On the rare occasions when I text a work-related contact, the conversation is usually just a quick reminder of an upcoming meeting or event.

Other new AI features are fun and impressive but don’t prove useful in the long term. I’m thinking of Samsung’s Portrait Studio, which launched on the Galaxy Z Fold 6 and Z Flip 6. It uses AI to reimagine photos of people in different art styles, like watercolor or cartoon.

When I got my hands on the Galaxy Z Fold 6 in July, I had so much fun playing with different selfies and images from friends to see how Samsung would reimagine us with a new look. But the novelty wore off quickly. I haven’t touched that feature since, even when I revisited the Z Fold 6 three months later.

Samsung’s Portrait Studio tool

I feel the same way about other image generation apps and features, like the Pixel 9’s Pixel Studio, which lets you create an image from a prompt, and Samsung’s Sketch to Image tool, which turns rough sketches into detailed images. I have to admit, there’s a certain joy in playing with these creative tools and seeing what they can do. But months later, these features still haven’t found a place in my daily life.

Apple also just launched a preview of its own image creation app, called Image Playground, as part of the iOS 18.2 developer beta. I haven’t spent enough time with it yet to form an impression, but I can’t imagine I’ll feel much differently about it.

Of course, my experience doesn’t reflect everyone’s. Some may find these tools highly valuable, such as people who struggle in social situations and need a little extra help figuring out how to frame a text message, or creatives who need to quickly produce images for a personal project. But that’s exactly my point: these features feel like they were designed for specific circumstances rather than as large-scale changes that advance the mobile experience.

The most promising features so far lay the groundwork for the future

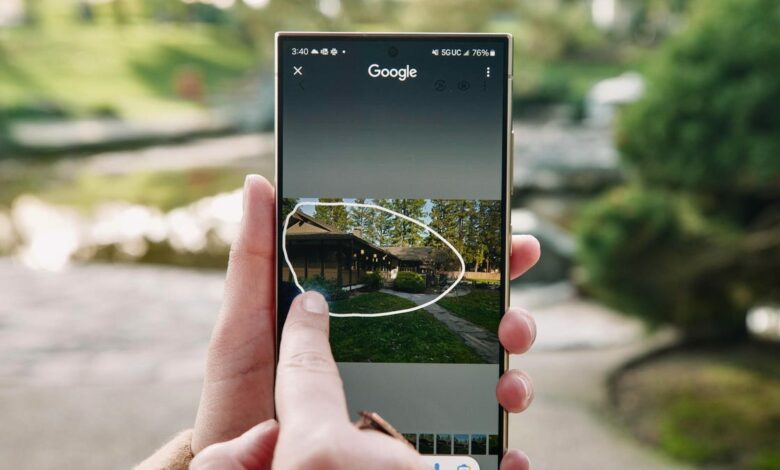

Apple’s Visual Intelligence tool lets you learn about the world around you by pointing your iPhone 16’s camera at an object or place.

While the vast majority of new AI features seem insignificant, a few show real potential. Google’s Circle to Search, which lets you start a Google search for almost anything on your phone screen by circling it, is one such example. So are Apple’s Visual Intelligence mode for the iPhone 16 and the message and notification summaries in Apple Intelligence.

What sets these features apart from the others mentioned above is that they feel integrated at the system level rather than buried in specific apps. More importantly, they’re designed to solve bigger-picture pain points around the way we use our phones, even if they don’t fully deliver on that ambition yet.

Circle to Search and Visual Intelligence are two of the strongest examples of this. At first glance, they may seem very different: Circle to Search uses what’s on your phone’s screen, while Visual Intelligence requires you to use the iPhone 16’s camera to scan the world around you. But they both want to eliminate the middle step: opening an app, starting a Google search, or typing a prompt in ChatGPT to retrieve information. They’re both an indication that tech giants think there’s a better way to get things done on our phones.

Apple Intelligence’s message and notification summaries also stand out as an example of an AI feature that sometimes feels genuinely useful without requiring any additional user effort. Like Circle to Search and Visual Intelligence, it feels like a major change aimed at a common problem: managing the influx of information on our mobile devices.

The Samsung Galaxy S24 Ultra was one of the first phones to support Circle to Search.

But even these features are far from perfect and still have a long way to go. Apple’s summaries are sometimes good enough to give me the gist of a text thread, but more often than not they miss crucial context. Visual Intelligence is still in preview as part of the iOS 18.2 developer beta, so I’m still getting a sense of its usefulness.

In addition, Visual Intelligence and Circle to Search suffer from the same conundrum: after years of being conditioned to tap, swipe and scroll, adopting a new way of working on our phones doesn’t come naturally. The very thing that makes these features interesting and promising is what’s holding them back. Drawing a circle around something on the screen, or launching the iPhone’s camera instead of opening Google, isn’t instinctive yet, and who knows if or when it will be.

What became clear in 2024 is that AI still has to prove its purpose on our smartphones. The potential of AI is starting to take shape, especially when you consider more dramatic ideas about how our phones could change, like Google’s Project Astra demo from Google I/O, Qualcomm’s concept for apps that can take actions for you and Brain.ai’s vision of a phone that generates its interface on demand. There are already many efforts underway to make phones more intuitive, such as Google’s Gemini extensions, which let the digital assistant work with other apps, and Apple’s upgraded Siri, which can understand personal context.

It’s impossible to say whether we’ll ever abandon apps in favor of AI, or rely on virtual agents to perform everyday tasks. But that’s not what I’m looking for in 2025. For now, I just want features that feel practical, useful, and innovative in a bigger way than what we’ve seen so far.