Is WAR the answer? How one advanced metric has come to dominate MVP voting

The summer duel between Aaron Judge and Bobby Witt Jr. for the AL MVP is reminiscent of a classic of the genre.

On one side, a ferocious slugger vying for the Triple Crown, leading the league in OPS and flirting with 60 homers. On the other, a transcendent five-tool star hitting 30 homers, stealing 30 bases, and playing exemplary defense in the middle of the diamond.

It would be easy, at first glance, to see a parallel to 2012, when Triple Crown winner Miguel Cabrera of the Tigers edged the Angels’ Mike Trout.

But the comparison comes with one problem:

Cabrera vs. Trout was a convenient proxy battle for old school vs. new school: the classic masher against the young king of WAR (Trout had significant leads in both versions of wins above replacement). Yet for most of the summer, Judge has played the role of both Cabrera and Trout, chasing a Triple Crown while hurtling toward 10.0 WAR and beyond.

As of Sunday, Judge led Witt in Baseball-Reference WAR (bWAR, 9.6 to 9.1) and FanGraphs WAR (fWAR, 9.8 to 9.7). The margins are slim when considering the variance of the wins above replacement metric, yet when paired with his offensive fireworks and pursuit of 60 home runs, Judge is a heavy favorite in the betting markets and a virtual lock to take home his second MVP in three seasons.

The somewhat muted conversations over Judge vs. Witt (as well as Shohei Ohtani vs. Francisco Lindor in the National League) have illustrated a recent shift in Most Valuable Player voting, conducted each year by the Baseball Writers’ Association of America.

If Judge is crowned AL MVP, it will likely be the sixth time in seven years that the award goes to a position player with the most Baseball-Reference WAR. (It could also be the fifth time in seven years that the AL MVP is the leader in fWAR.)

Twelve years after Cabrera vs. Trout, the voting trends underscore an intriguing relationship between WAR and the MVP Award: Baseball writers have never been more educated on the merits, flaws and limitations of wins above replacement, an advanced metric with multiple forms that has revolutionized how the sport views overall value. And yet, they have also never been more likely to select an MVP who sits atop the WAR leaderboards.

In some ways, the relationship is simple enough: Gone are the days when MVPs won on the backs of RBI totals and puffed-up narratives. The advent of WAR offered a framework for value that has produced a smarter and more informed electorate. But as the MVP aligns closer and closer with the WAR leaderboards, it’s easy to wonder: Have MVP voters, in the aggregate, become too confident in WAR’s ability to determine overall value?

“If you’re a voter in a season like this and all you do before you cast your ballot is sort our leaderboards and grab the name at the top, I don’t think you’re doing your diligence,” Meg Rowley, the FanGraphs managing editor, said in an email. “First, that approach assumes a precision that WAR doesn’t have.”

Judge and Ohtani (who, in his first year in the NL, could become the first player with 50 homers and 50 stolen bases) are both overwhelming favorites to win, though both hold bWAR leads that are well within the statistic’s margin of error. (Lindor leads Ohtani in fWAR 7.4 to 6.9.)

“No one should view a half a win difference as definitive as to who was more valuable,” Sean Forman, the founder of Baseball-Reference, said in an email.

For Don A. Moore, a researcher who studies biases in human decision-making, the prominence of WAR when it comes to voting could represent an example of “overprecision bias,” which is characterized by the excessive certainty that one knows the truth.

“Human judgment tends to reduce the complexity of the world by zeroing in on a favorite measure or an interpretation or an explanatory theory,” says Moore, a psychologist and professor at the Haas School of Business at UC Berkeley. “That leads us, so easily, to neglect the uncertainty and variability and imprecision.”

Moore’s focus is in the area of “overconfidence.” He is also a somewhat casual baseball fan with a soft spot for the “Moneyball” story.

“On the one hand,” he says, “it’s great if vague, subjective, potentially biased impressions can be clarified and improved by quantification.”

In this way, the development and acceptance of WAR stands as a triumph over the decision-making that ruled MVP voting for decades. But Moore, an academic who sees overconfidence everywhere, also offers a warning:

“It’s easy for us to zero in on some statistic and forget that it’s imprecise and noisy and there are other approaches,” Moore says. “So overprecision leads all of us to be too sure that we’re right and ask ourselves too little: What else might be right?”

The story of wins above replacement is really the story of baseball in the 21st century. So let’s do the short version: It began, roughly speaking, in the early 1980s with two pioneers of the sabermetric movement: Bill James and Pete Palmer.

James, the godfather of sabermetrics, was, at that point, utilizing a primitive concept of “replacement level” to rank players in his annual “Bill James Baseball Abstract.” Palmer, meanwhile, had introduced the system of “linear weights,” which determined an offensive player’s value in “runs” compared to a baseline average. In the 1990s, Keith Woolner built on that work and developed value over replacement player, or VORP, which was acquired and promoted by Baseball Prospectus. With the basic ideas in place, the refinement, innovation and improvement continued for another 15 years.

What emerged was a consensus: an all-encompassing metric that measured a player’s offense, defense and base running in “runs above replacement” and then converted that number into wins: WAR.

There was no official formula, which meant that sites such as Baseball Prospectus, Baseball-Reference and FanGraphs were free to develop their own versions. The crude nature of defensive and base-running metrics meant that WAR was often noisy in small samples. But the statistic presented a solution to one of baseball’s eternal problems.

“If you want to properly value defense, it’s hard to know how to do that unless you have a framework,” says Eno Sarris, a baseball writer at The Athletic and a past MVP voter. “How do I compare a shortstop who is 40 percent better than league average with the stick to a DH who is 70 percent better than league average with the stick? Without a framework, it’s guesswork.”

FanGraphs began publishing its WAR statistic on its website in December 2008, while Baseball-Reference unveiled its own WAR variation for the 2009 season (before a major overhaul in 2012). The metric’s public arrival offered more than just a better mousetrap. Both sites could retroactively calculate WAR for past seasons, which meant that past MVP votes were subject to historical peer review.

Willie Mays, for instance, led the National League in bWAR 10 times, often by significant margins. He won just two MVP awards.

“That seems like it’s backwards,” Sarris says.

In 1984, Ryne Sandberg won the NL MVP Award and also led the league in WAR. (Charlie Bennett / Associated Press)

To look back at the MVP votes of the past is to see a snapshot of what the sport valued at a given moment, along with a number of surprising (and often contradictory) trends. Whether it was the Reds’ Joe Morgan in the 1970s, Robin Yount in 1982, Cal Ripken Jr. in 1983 or Ryne Sandberg in 1984, baseball writers often did reward players with versatile skill sets who led the league in bWAR (if anyone had known how to calculate it). They also gave the award to relief pitchers three times from 1981 to 1992, while one of the most predictive stats for MVP Award winners was RBIs. From 1956 to 1989, the RBIs leader won the MVP 50 percent of the time in the NL and 47 percent of the time in the AL. (Since 1999, the NL RBIs leader has won the MVP just eight percent of the time, while the AL RBIs leader has won the award 24 percent of the time.)

“It was very different,” says Larry Stone, a longtime columnist at the Seattle Times who started covering baseball in 1987. “I’m almost — not ashamed — but embarrassed. I think I just looked at the counting stats mainly — home runs, average and RBIs were huge. And often the tie-breaker was the team’s performance. There was not much sophistication back in those days.”

Of course, it was also true that sometimes the MVP was blatantly obvious no matter what statistics were used. When Barry Bonds won four straight MVPs in the early 2000s, he led the league in bWAR each time. When Albert Pujols broke Bonds’ streak in 2005, he, too, led the league in bWAR. As supporters of WAR often point out: The basic offensive numbers in the formula are the same ones we have measured for the last century.

The data on MVP voting, however, started to shift in the 2000s as WAR entered the public square. Noticing the trends, a baseball fan named Ezra Jacobson embarked on a project last winter to research the yearly difference between each league’s leader in bWAR and its MVP. Not surprisingly, he found the average had been shrinking for decades. In the 1980s and ’90s, the average difference between the AL MVP and the leader in bWAR was 2.1 and 3.04 WAR, respectively. In the 2010s, the difference had dwindled to 0.9. In the 2020s, it’s 0.05.

Voters have become more informed and increasingly formulaic and uniform.

“I think the voting is massively improved from where it was,” says Anthony DiComo, who covers the Mets for MLB.com and has been an NL MVP voter. “Show me the MVP voting in recent history that was wrong? There have been some you could argue either way.

“If you go into the way past, there’s quite a few in history where you can say: ‘Geez, they got it wrong. This guy should not have been MVP.’ And I don’t think that really happens that much anymore.”

The voting electorate consists of two BBWAA members from each American League and National League city, creating a total of 30 writers for each league award. When Stone received his first ballot in the early 1990s, the letter included a list of five rules that had been on the ballot since 1931.

Voters were instructed to consider:

- Actual value of a player to his team, that is, strength of offense and defense.

- Number of games played.

- General character, disposition, loyalty and effort.

- Former winners are eligible.

- Members of the committee may vote for more than one member of a team.

If there was consternation over the rules, it usually came back to No. 1.

“The word ‘value’ is the one you ponder,” Stone says.

For decades, the vague nature of “value” allowed MVP voters to promote a host of different meanings. (Leave it to a group of writers to fuss over language.) Did an MVP have to come from a winning team? Or was the value actually in helping a team defy expectations? In 1996, the Rangers’ Juan Gonzalez won the AL MVP over Ken Griffey Jr. and Alex Rodriguez despite being worth just 3.8 bWAR, more than 5.0 WAR less than either Griffey or Rodriguez. This was, in part, because the two Mariners teammates split some of the vote. But it was mostly because Gonzalez helped the Rangers make the playoffs for the first time in franchise history.

“In the 90s,” said Tyler Kepner, a veteran baseball writer at The Athletic, “it always seemed to be: ‘Who was the best player on the team that seemed least likely to win going in?’ ”

When Bob Dutton, a former baseball writer at The Kansas City Star, became national president of the BBWAA in the late 2000s, he took on a research project to confirm the original intent of the word “value.” “It was always supposed to mean ‘the best player,’ ” he says.

WAR brought a framework for considering players in their totality. As a consequence, it has caused a generation of younger writers and voters to reframe the idea of value, separating it from team success. WAR has not just become a metric for determining value; it’s become synonymous with the idea. The evolution likely helped pitchers Justin Verlander and Clayton Kershaw win MVP Awards in 2011 and 2014, respectively: both pitchers led their leagues in WAR.

“It reflects the times we’re in,” Kepner said. “As front offices and the game itself values data more and more, it stands to reason that the voting would reflect that as well.”

Anecdotally, it’s nearly impossible to find an MVP voter who blindly submits a ballot copied and pasted from a WAR leaderboard. With multiple versions of the stat in circulation, that would be difficult anyway. Rowley, the managing editor of FanGraphs, and Forman, the founder of Baseball-Reference, both emphasize that WAR should be a starting point in determining the MVP, not the finish line.

DiComo, a past voter, begins the process by compiling a spreadsheet with the top 10 in a number of statistics: weighted runs created plus, expected weighted on-base average, bWAR, fWAR, and win probability added. The first step creates a small pool of candidates. He supplements that with conversations with players, executives, managers and other writers. Then he might use other numbers as he ranks the top 10 on his ballot.

The goal, he said, is “to tease out a lot of my own biases that might exist without even knowing it.”

(Full disclosure: I voted for AL MVP in 2016 and 2017 and used a process roughly similar to this one.)

If there’s one significant difference between the voting process today and 30 years ago, beyond the information available, Stone notes that it used to be “more of a solitary exercise, which meant you couldn’t be influenced.” Now, not only are the WAR leaderboards public and updated daily, but individual MVP ballots are also released publicly online.

“I do worry about groupthink,” Stone says.

“My argument,” DiComo says, “is that we’ve gotten so good at measuring this, and voters tend to think about it more and more similarly. So it’s like: ‘Yeah, if there’s a small edge, in reality there’s a big edge in voting because everyone is seeing that small edge and voting for the guy who has it.’”

WAR has not remained static over the years; FanGraphs now uses Statcast’s defensive metrics in its WAR formula. Still, while defensive metrics have improved significantly since the early 2000s, they are still based on a sample of plays drastically smaller than, say, 700 plate appearances. Even as WAR improves and becomes ever more relied on, it remains only a partial measure.

Moore, the UC Berkeley professor, likened the flaws of WAR to economists’ use of gross domestic product, or GDP, to measure economic growth.

“Everybody knows it’s woefully imperfect for capturing what we actually care about when it comes to economic growth,” Moore said of GDP. “But the thing is: It’s better than the alternatives. So we end up relying on it very heavily.”

The same can be said of WAR. It is not a perfect stat, but it is the best we have. Its creators and supporters are clear and explicit in explaining that a half win (0.5 WAR) is not statistically significant in determining which player had a more valuable season. But the thing is: Small margins are often determinative.

More than a decade later, the Trout-Cabrera MVP race might have gone a little differently. (Harry How / Getty Images)

Every MVP winner for the last decade has finished within 0.6 WAR of the league lead among position players at either Baseball-Reference or FanGraphs. The last MVP who did not: Miguel Cabrera in 2012.

Stone, who retired last year, had a vote that season. Cabrera was the first player to lead the league in batting average, home runs and RBIs since 1967. But Trout was so dominant in WAR that Stone was torn.

“I agonized over that,” he said. “I ended up voting for Cabrera, just because I thought the Triple Crown was such a monumental achievement.”

Cabrera received 22 of 28 first-place votes; Trout took the other six. But 12 years later, the winds have continued to change. If the vote happened today, Stone believes the result might be different.

“I think it might be closer,” he said. “I think there’s a chance that Trout might win.”

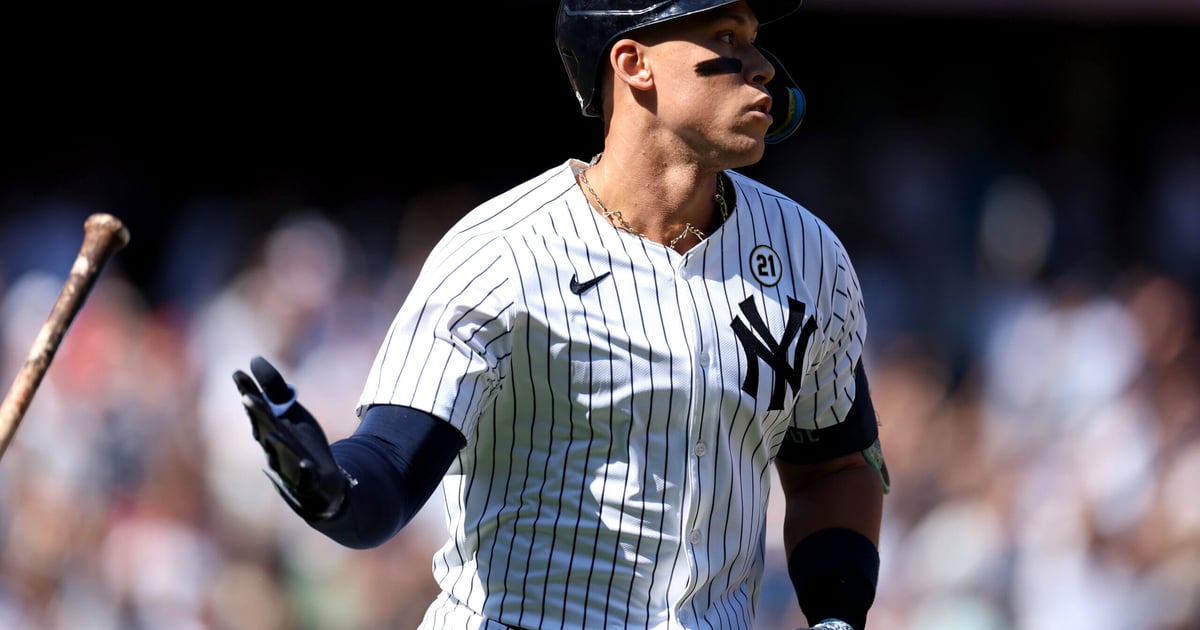

(Top photo of Judge: Luke Hales / Getty Images)