Meta AI can now talk to you with John Cena’s voice, but you can’t see him

Meta AI’s voice chat feature was finally introduced on Wednesday, after rumors about it emerged earlier this week. The social media giant announced several new artificial intelligence (AI) features for its native chatbot at Meta Connect 2024, with voice chat being a major highlight. The feature lets users hold a two-way conversation with the AI without having to type or read text. However, the voice is not emotionally expressive; the experience is closer to that of a text-to-speech tool.

In a newsroom post, the social media giant detailed the feature, which was also shown during the event. Meta highlighted that voice chat will be rolled out to Meta AI on Messenger, Facebook, Instagram DM and WhatsApp.

Simply put, this is a voice mode for the AI, similar to what companies like OpenAI and Google already offer with their chatbots. Unlike the advanced voice mode on ChatGPT, which has a human-like expressive voice, can react to the user’s words and converses in real time, Meta AI voice chat is closer to having generated text read aloud.

Judging from the demo shared by the company, the voice isn’t entirely robotic, but there is little to no voice modulation. One advantage, however, is that it is available for free to all users. Meta also plans to introduce several new voice options for users to choose from. These include five celebrity voices: Awkwafina, Dame Judi Dench, John Cena, Keegan-Michael Key and Kristen Bell.

A report earlier this week revealed that Meta was planning to introduce celebrity voices for this feature. The company is said to have struck deals with the celebrities to use their voices for Meta AI. As such, more celebrities may be added in the future.

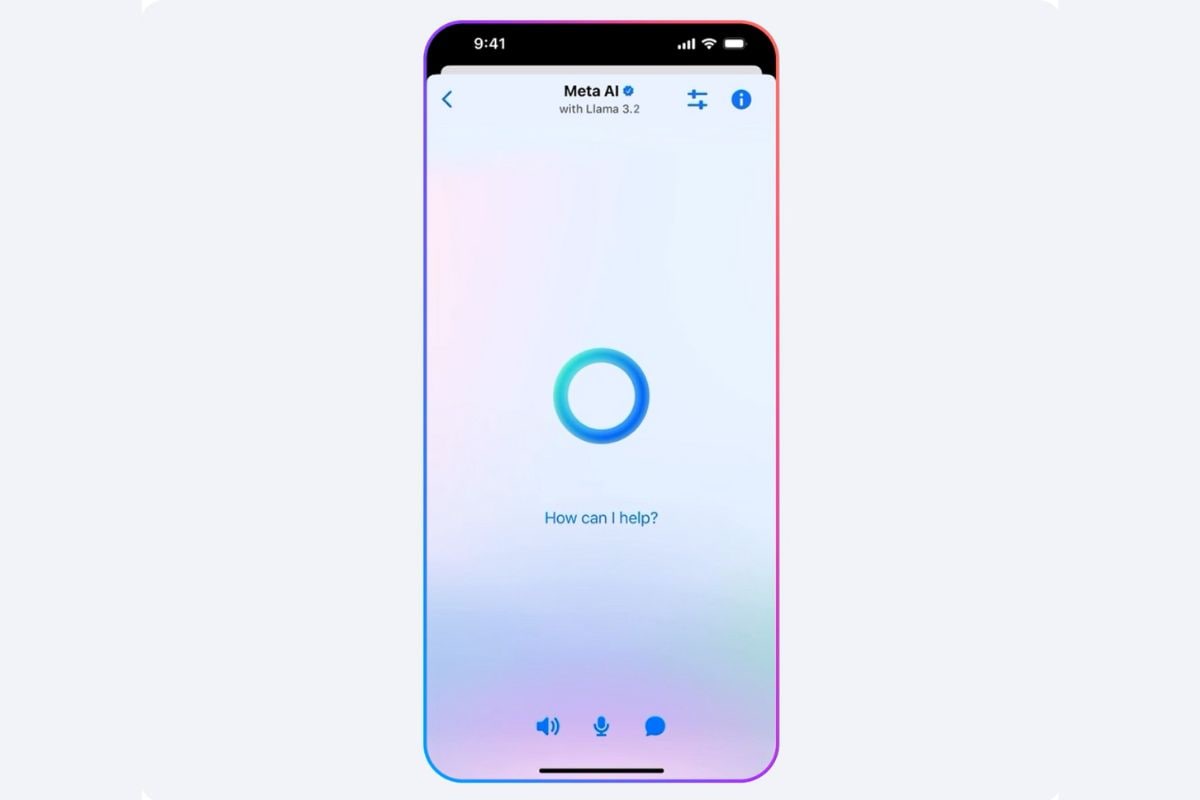

To use voice chat mode, users must go to the Meta AI interface on one of the Meta-owned platforms that support it. There, users can find a waveform icon next to the text field. Tapping it opens a window with the Meta AI icon in the center and speaker, mute, and message icons at the bottom. From here, users can speak their questions and instructions, and the AI will respond verbally in the chosen voice.