Micron launches 36GB of HBM3E memory as it overtakes Samsung and SK Hynix as arch-rivals frantically move towards the next big thing: HBM4 with its 16 layers, 1.65 TB/s bandwidth and 48GB SKUs

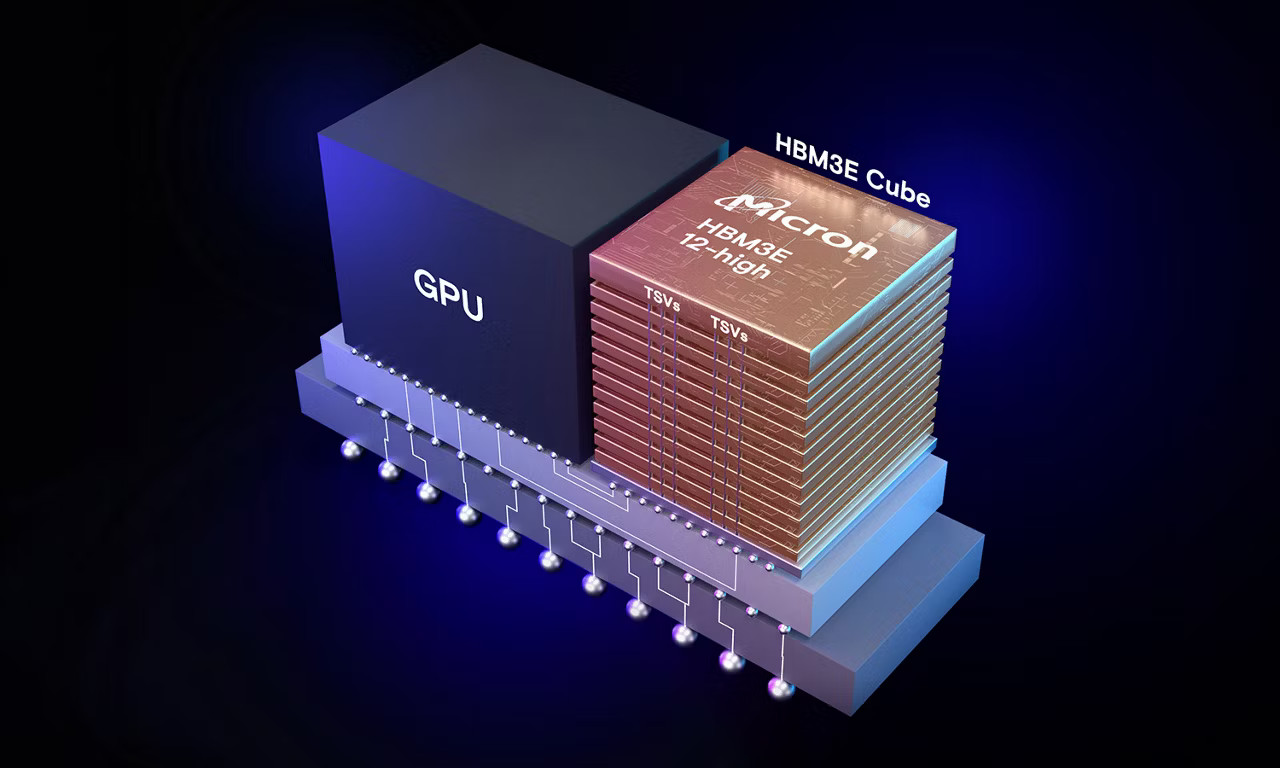

Micron has officially launched its 36GB HBM3E 12-high memory, marking the company’s entry into the competitive landscape of high-performance memory solutions for AI and data-driven systems.

As AI workloads become more complex and data-heavy, the need for energy-efficient memory solutions becomes paramount. Micron’s HBM3E aims to strike that balance and provide faster processing speeds without the high power demands typically associated with such powerful systems.

Micron’s 36GB HBM3E 12-high memory stands out for its capacity, which is 50% higher than that of current HBM3E offerings. This makes it a critical component for AI accelerators and data centers that manage large workloads.

36 GB HBM3E 12-high memory for AI acceleration

Micron says its offering delivers more than 1.2 terabytes per second (TB/s) of memory bandwidth, with a pin speed of more than 9.2 gigabits per second (Gb/s), ensuring fast data access for AI applications. The company positions the new memory as an answer to growing demand for larger AI models and more efficient data processing, and claims it consumes 30% less power than rival products.
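The relationship between pin speed and stack bandwidth can be checked with some quick arithmetic. The sketch below assumes a 1024-bit interface per stack, which is the width used by the HBM3 generation but is not stated in Micron's figures above; the function names are purely illustrative.

```python
# Back-of-the-envelope peak bandwidth for a single HBM stack.
# Assumption: 1024 data pins per stack (HBM3-generation interface width).
# Decimal units are used throughout (1 TB = 1000 GB).

def stack_bandwidth_tbs(pin_speed_gbps: float, bus_width_bits: int = 1024) -> float:
    """Peak bandwidth in TB/s for a given per-pin speed in Gb/s."""
    gigabits_per_second = pin_speed_gbps * bus_width_bits
    gigabytes_per_second = gigabits_per_second / 8  # 8 bits per byte
    return gigabytes_per_second / 1000


def pin_speed_for_bandwidth(target_tbs: float, bus_width_bits: int = 1024) -> float:
    """Per-pin speed in Gb/s needed to reach a target bandwidth in TB/s."""
    return target_tbs * 1000 * 8 / bus_width_bits


if __name__ == "__main__":
    print(f"{stack_bandwidth_tbs(9.2):.3f} TB/s at 9.2 Gb/s per pin")
    print(f"{pin_speed_for_bandwidth(1.2):.3f} Gb/s per pin for 1.2 TB/s")
```

Under that assumption, exactly 9.2 Gb/s per pin works out to roughly 1.18 TB/s, so the "more than 1.2 TB/s" figure implies pins running at about 9.4 Gb/s, which is consistent with Micron's "more than 9.2 Gb/s" wording.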

While Micron’s HBM3E 12-high memory delivers notable improvements in terms of capacity and energy efficiency, it enters a field where both Samsung and SK Hynix have already established a dominant position. These two rivals are aggressively pursuing the next big thing in high-bandwidth memory: HBM4.

HBM4 is expected to feature 16 layers of DRAM and offer more than 1.65 TB/s of bandwidth, exceeding the capabilities of HBM3E. Additionally, with configurations of up to 48GB per stack, HBM4 is expected to provide even greater memory capacity, allowing AI systems to handle increasingly complex workloads.
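The capacity figures follow from simple stack arithmetic: layers multiplied by the capacity of each DRAM die. The sketch below assumes 24Gb (3GB) dies, a density inferred here from 36GB across 12 layers rather than stated in the article, and the function name is illustrative only.

```python
# Stack capacity as a function of layer count.
# Assumption: every layer is a 24Gb (3GB) DRAM die, inferred from 36GB / 12 layers.

def stack_capacity_gb(layers: int, die_density_gbit: int = 24) -> float:
    """Capacity of one stack in GB, given the number of DRAM layers."""
    return layers * die_density_gbit / 8  # 8 bits per byte


if __name__ == "__main__":
    for layers in (8, 12, 16):
        print(f"{layers}-high: {stack_capacity_gb(layers):.0f}GB")

    # Relative gain of a 12-high stack over an 8-high stack built from the same dies.
    gain = stack_capacity_gb(12) / stack_capacity_gb(8) - 1
    print(f"12-high vs 8-high: {gain:.0%} more capacity")
```

With the same die density, an 8-high stack comes to 24GB, a 12-high stack to 36GB and a 16-high stack to 48GB, which lines up with both the 50% capacity gain quoted for HBM3E 12-high and the 48GB figure expected for HBM4.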

Despite pressure from its competitors, Micron’s HBM3E 12-high memory remains an important part of the AI ecosystem.

The company has already started shipping production-ready units to key industry partners for qualification, allowing them to integrate the memory into their AI accelerators and data center infrastructures. Micron says its support network and ecosystem partnerships help its memory solutions integrate smoothly into existing systems, driving performance improvements in AI workloads.

One notable partnership is Micron’s membership of TSMC’s 3DFabric Alliance, which helps optimize the production of AI systems. This alliance will support the development of Micron’s HBM3E memory and ensure it can be integrated into advanced semiconductor designs, further expanding the capabilities of AI accelerators and supercomputers.