OpenAI warns that users may become attached to ChatGPT’s voice mode

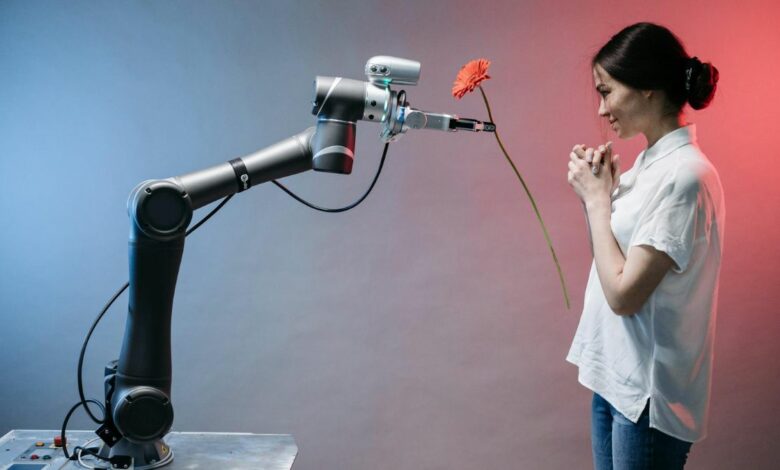

OpenAI warned on Thursday that the recently released Voice Mode feature for ChatGPT could lead to users engaging in social interactions with the artificial intelligence (AI) model. The information was part of the company’s System Card for GPT-4o, a detailed analysis of the potential risks and possible safeguards of the AI model that the company tested and researched. Among the many risks was the possibility that people could anthropomorphize and become attached to the chatbot. The risk was added after the company noticed signs of it during early testing.

ChatGPT Voice Mode could lead users to bond with the AI

In a detailed technical document labeled System Card, OpenAI highlighted the societal impact of GPT-4o and of the features powered by the model that it has released so far. The AI company emphasized the risk of anthropomorphization, which essentially means attributing human characteristics or behaviors to non-human entities.

OpenAI raised concerns that, since Voice Mode can modulate speech and express emotions similar to those of a real human, users could become attached to it. The fears aren’t unfounded. During initial testing, which included red-teaming (using a group of ethical hackers to simulate attacks on the product to test for vulnerabilities) and internal user testing, the company found instances where some users formed social relationships with the AI.

In one specific case, a user was found expressing shared ties and telling the AI, “This is our last day together.” OpenAI said there is a need to explore whether these signals can evolve into something more impactful after a longer period of use.

A major concern, if these fears prove true, is that the AI model could affect human interactions as people become more accustomed to socializing with the chatbot. OpenAI said this could benefit lonely individuals, but it could also have a negative impact on healthy relationships.

Another issue is that extensive AI-human interactions can influence social norms. OpenAI highlighted this by giving the example that ChatGPT allows users to interrupt the AI at any time and “grab the mic,” which is anti-normative behavior when it comes to human interactions.

There are also broader implications of people bonding with AI. One such issue is persuasion. While OpenAI found that the models’ persuasion scores weren’t high enough to be concerning, this could change if the user starts to trust the AI.

At this point, the AI company doesn’t have a solution for this, but it plans to continue to observe the development. “We plan to further study the potential for emotional dependency, and ways in which deeper integration of the many features of our model and system with the audio modality can drive behavior,” OpenAI said.